Contents

Data is a precious treasure for human life in the Information Age. As data comes from enormous sources, most organizations have recently struggled with data integration and governance. As a result, each company needs an ultimate solution to solve this problem and enhance its business performance. This blog will present the general approaches to matching these tasks.

Data warehouse

First, let’s explore the definition and key characteristics of data warehouse.

Definition

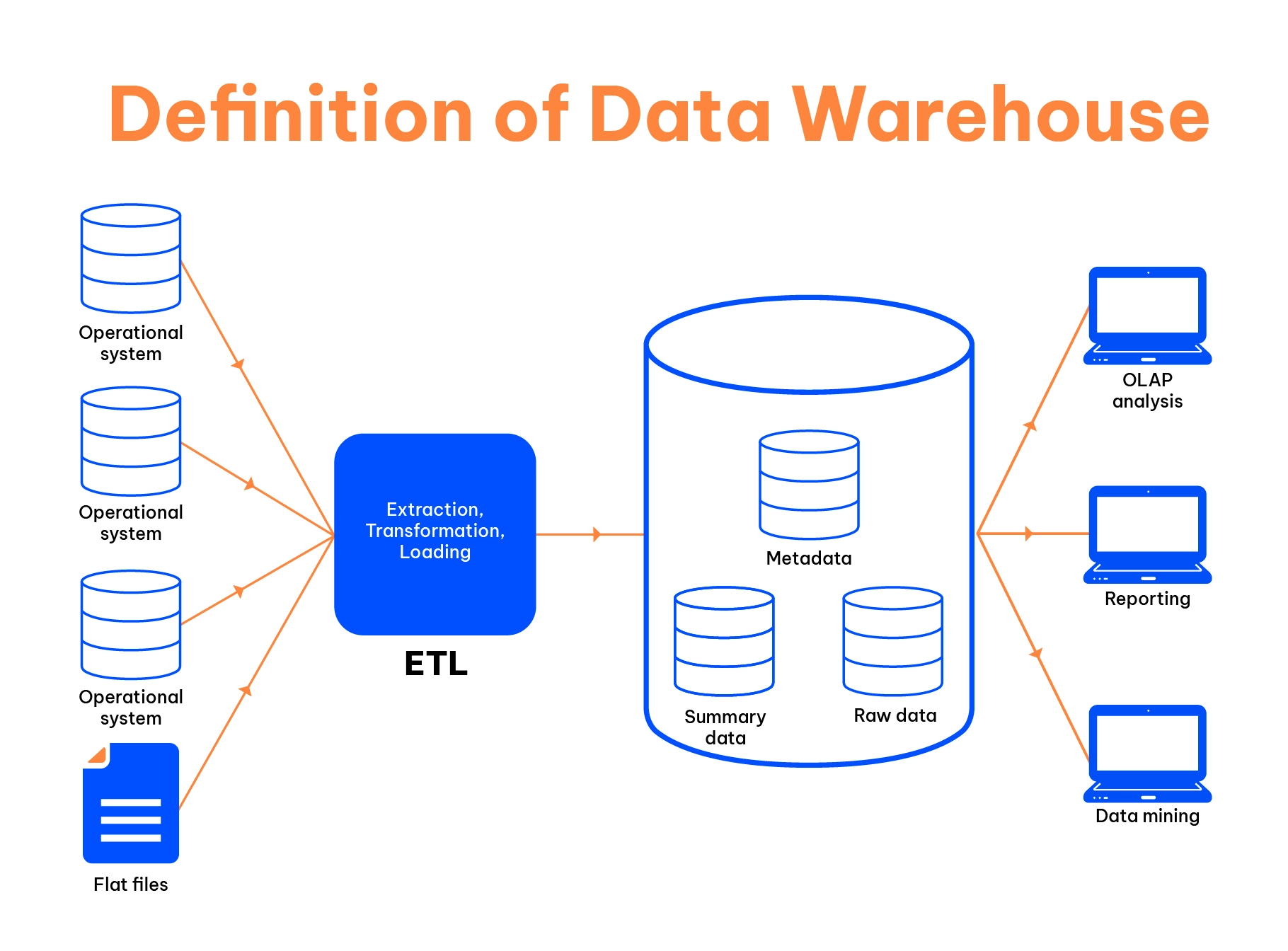

A data warehouse is a central repository for storing transformed data. Since the data is stored, it can not be changed in the data warehouse. Various types of data, such as structured, semi-structured, or unstructured inputs, will be converted by the ETL process before entering the data warehouse. The main functions of the data warehouse are to analyze historical data and run queries.

Data warehouse characteristics

A data warehouse is considered a data management system that assists in making decisions. It has four distinct characteristics, as follows:

Subject-oriented

A data warehousing process is designed to resolve a specific theme such as sales, distribution, or marketing instead of the organization’s existing operations. It focuses on making decisions on a particular subject by modeling the data and eliminating irrelevant or redundant information.

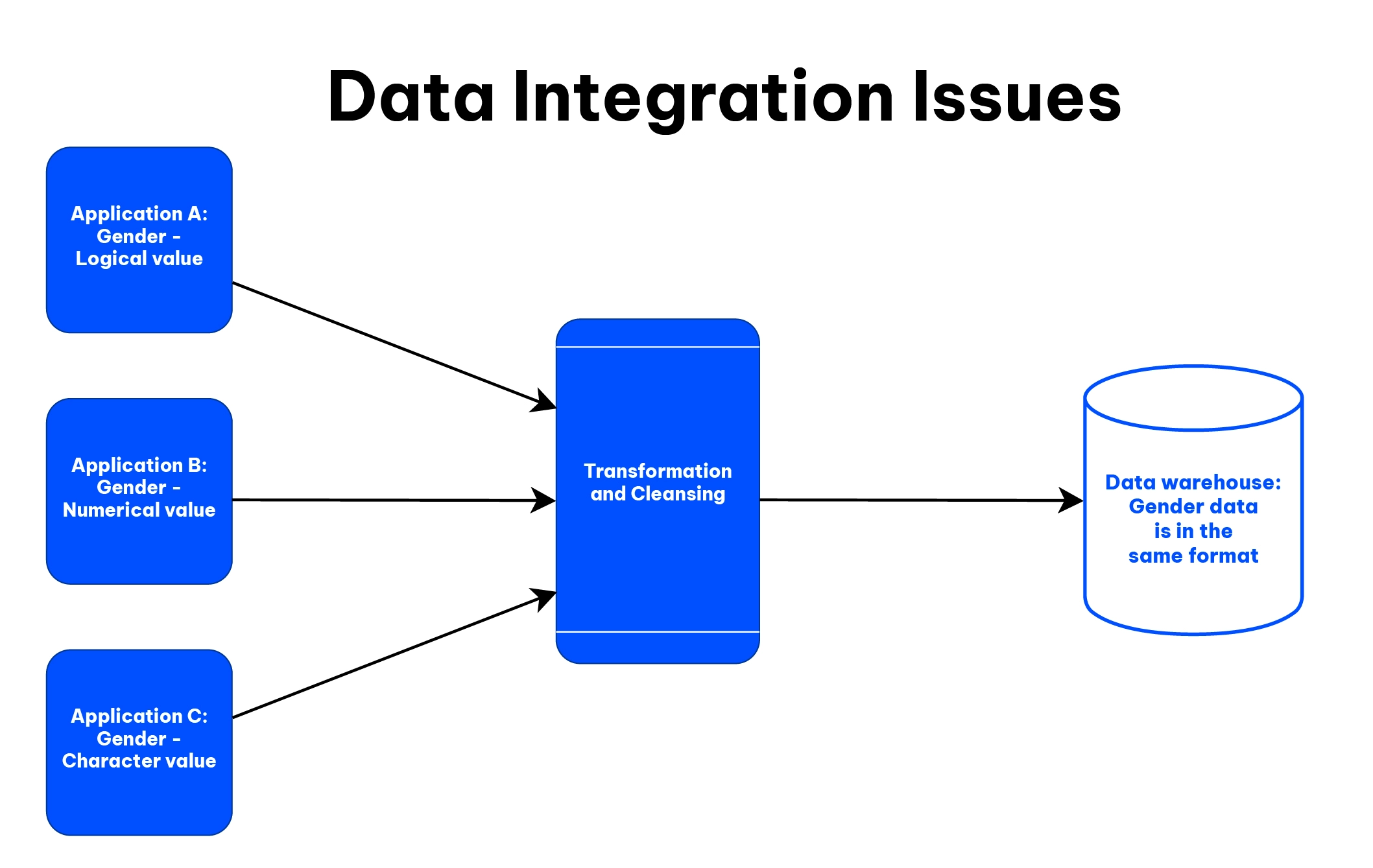

Integrated

Data from numerous sources, including mainframes, flat files, and relational databases, is integrated to develop the data warehouse. This data warehouse integration establishes a standard format for all similar data derived from the different databases and helps with proper data analysis. The critical point is to ensure the reliability of the name conventions—scaling, encoding structure, etc.

For instance, we have three different applications labeled A, B, and C with information about gender, date, and balance. However, in each application, the data on genders is stored in different formats, as shown in the figure above.

- Application A: gender data is a logical value.

- Application B: gender data is a numerical value.

- Application C: gender data is a character value.

After the processing and cleansing process, the gender data from the three applications are converted to the same format in the data warehouse.

Time-variant

Time variance is a distinct feature, indicating that data warehouses are consistent within a period, meaning that the data warehouse is loaded daily, hourly, or on some other periodic basis, and does not change within that period. This feature sets DWs apart from operational systems which store daily business transaction records and can only keep up-to-date information.

As seen in Fig 1, the operational system is considered the original source of data before loading it into the data warehouse. The past data in the data warehouse is queried and analyzed across a given period. Whereas, in transactional systems, old data is either moved or deleted.

For example, a customer bought products from your company. She lived in Melbourne, Australia, then moved to Sydney from 2008 to 2013. Currently, she has settled down in Paris, France, since July 2014.

Your business could use a data warehouse to look through all past addresses of the customer, but the transactional system only updates the current address.

Non-volatile

As data archived in the data warehouse is permanent, it is not erased or deleted if new data is entered. Data is refreshed at predetermined times and is read-only, which is useful for examining and analyzing historical data.

Transactional processing, recovery, and concurrency control techniques are not required. In a data warehouse environment, functions like deleting, updating, and inserting performed in an operational application are excluded. Instead, there are two different types of data operations:

- Data Loading

- Data Access

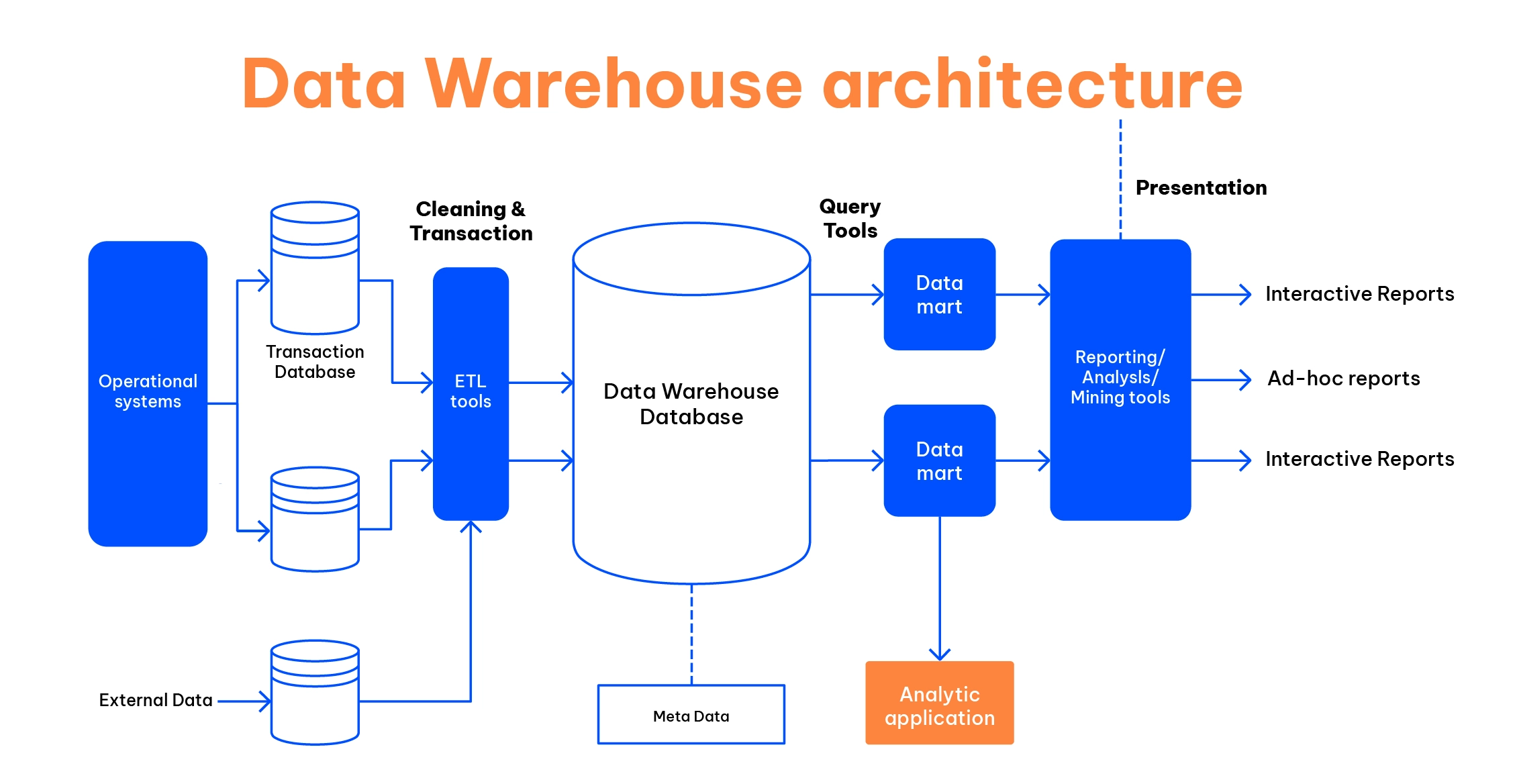

Data warehouse architecture

Modern data warehouses need “data warehouse architecture” as the design and building blocks to store, clean, and organize data properly. The main purpose of the data warehouse architecture is to determine which approaches are the most effective for consolidating corporate information from diverse data sources to support customized queries, business analysis, and decision-making.

According to the data warehouse model below, there are five main components in the process, including:

- Data warehouse access tool (ETL tools)

- Central database (Data Warehouse Database)

- Metadata

- Query tools

- Data warehouse bus

However, the components may vary according to the enterprises’ requirements and conditions.

Data warehouse access tools (ETL tools)

ETL tool draws out data from original sources and then converts it into a standard format before entering the data warehouse.

The tasks of these ETL tools:

- Removing irrelevant data from operational databases before loading them into the data warehouse

- Calculating summaries and data extracted

- Put default values in place for any missing data

- De-duplicate repeated data derived from multiple original sources

- Detect and substitute common names and definitions for data derived from different sources.

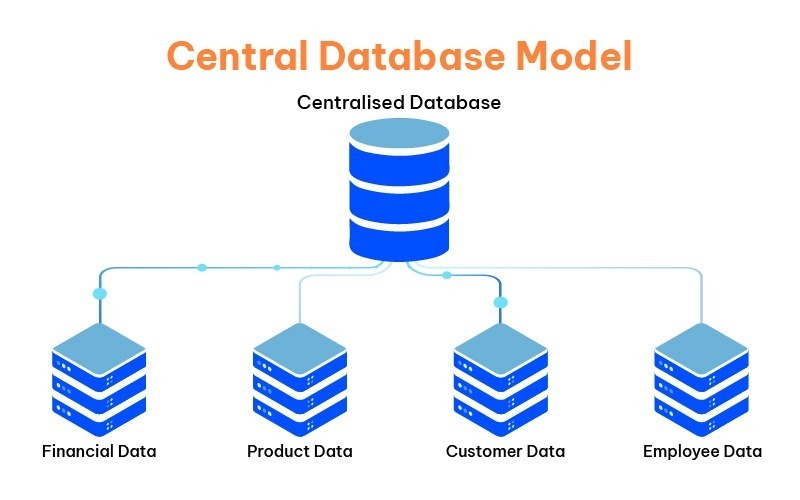

Central database

A central database is a database that archives all organizational data and makes it available for analytics and reporting. The key point is that the company has to determine which types of databases are appropriate for storing information in the data warehouse.

Recently, many enterprises have chosen relational databases as a central database to build a grounded foundation. Today, relational databases are increasingly being utilized for business intelligence, data integration, and data warehousing thanks to the advancement of relational technologies.

To support relational databases in business intelligence, various alternative technologies are replacing them in many parts of the technical architecture. Some prevalent technologies include:

- OLAP databases

- Massively parallel processing (MPP) databases

- Data virtualization

- In-database analytics

- In-memory analytics

- Cloud-based BI, DW, or data integration

- NoSQL databases

Metadata

Metadata is “data about data,” created, transformed, stored, accessed, and exploited in enterprises. Metadata determines how data is modified and processed in the data warehouse. It also clarifies the data’s origin, usage, values, and features.

To handle metadata effectively, it is important to understand what the data represents, where it was extracted, how it was transformed, and so on to deliver consistent, complete, validated, clean, and up-to-date data for business analytics.

An example of how Metadata converts raw data into valuable knowledge:

Raw data: 2501 TK019 09.08.22

- Model number: 2501

- Sales agent ID: TK019

- Date: 09/08/2022

Two types of Metadata are Technical and Business metadata.

Technical data: the data is processed by software tools and is commonly used by data warehouse designers and administrators. For example:

- ETL tools specify fields and mappings between source and targets, transformations, and workflows.

- Databases scrutinize columns (format, size, etc.), tables, and indexes.

- BI tools interpret fields and report them.

Business data: the data offers an easier way for end-users to understand a particular data set stored in the data warehouse. For example, the business context for reporting on weekly sales, inventory turns, or budget variances is business data.

Query tools

Query tools allow organizations to interact with the data warehouse system

There are four different types of query tools:

- Query and reporting tools

- Application Development tools

- Data mining tools

- OLAP tools

Data warehouse bus

The data warehouse bus determines data flow in the data warehouse, consisting of Inflow, Outflow, Upflow, Downflow, and Metaflow. When building a data bus, it is required to consider the shared dimensions and facts between data marts thoroughly.

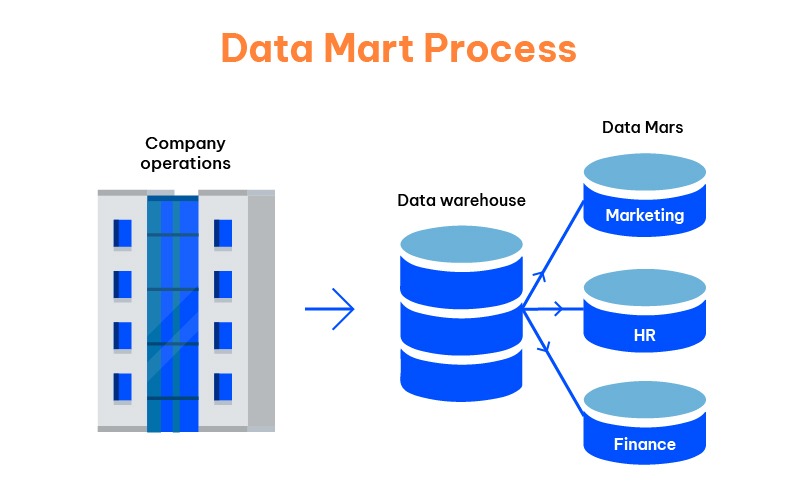

Data mart

A data mart is a subset of a data warehouse focused on a specific business area, such as sales, marketing, finance, or HR. Typically managed by a single department, it is used by specific teams within an organization. Unlike data warehouses, data marts are smaller and more flexible, sourcing data from fewer inputs.

There are five fundamental steps to implementing a data mart.

Step 1: Designing

- Collecting the technical as well as commercial needs and locating data sources.

- Choosing a suitable data subset.

- Establishing the data mart’s logical and physical structure.

Step 2: Constructing

Deploy the actual database that was established in the earlier stage.

For example, objects used in database schemas, such as tables, indexes, views, etc.

Step 3: Populating

- Drawing data to target data mapping

- Deriving the source of data

- Cleansing and converting the data

- Bumping data into the data mart

- Building and archiving metadata

Step 4: Accessing

The access step needs to perform the following tasks:

- Launch meta layer to convert database structures and objects’ names into business terms -> Assist non-technical users working in Data marts easily.

- Build up and cultivate database structures.

- Establish APIs and interfaces if needed.

Step 5: Managing

The Data Mart Implementation process ends with this step. This step includes management duties like

- Managing continuous user access

- Optimizing and adjusting system performance

- Loading and controlling fresh data into the Data marts successively

- Preparing recovery methods and guaranteeing the system’s availability if the system fails.

Data warehouse reporting layer

Through the reporting layer of the data warehouse, end users have access to the BI interface or database architecture. The data warehouse’s reporting layer serves as a dashboard for data visualization, report creation, and extraction.

A data warehouse only retrieves data in a unified format. Hence, it is essential to use an optimal tool like the ETL tool to convert raw data from multiple sources into appropriate data formats. ETL tools can boost the speed and efficiency of extracting, transforming, and loading a huge amount of data into the data warehouse while guaranteeing the high quality of the data. Make your digital transformation seamless and future-ready Accelerate your business growth with zero-disruption modernization services. Maximize the value of your current infrastructure, streamline processes, and cut expenses.

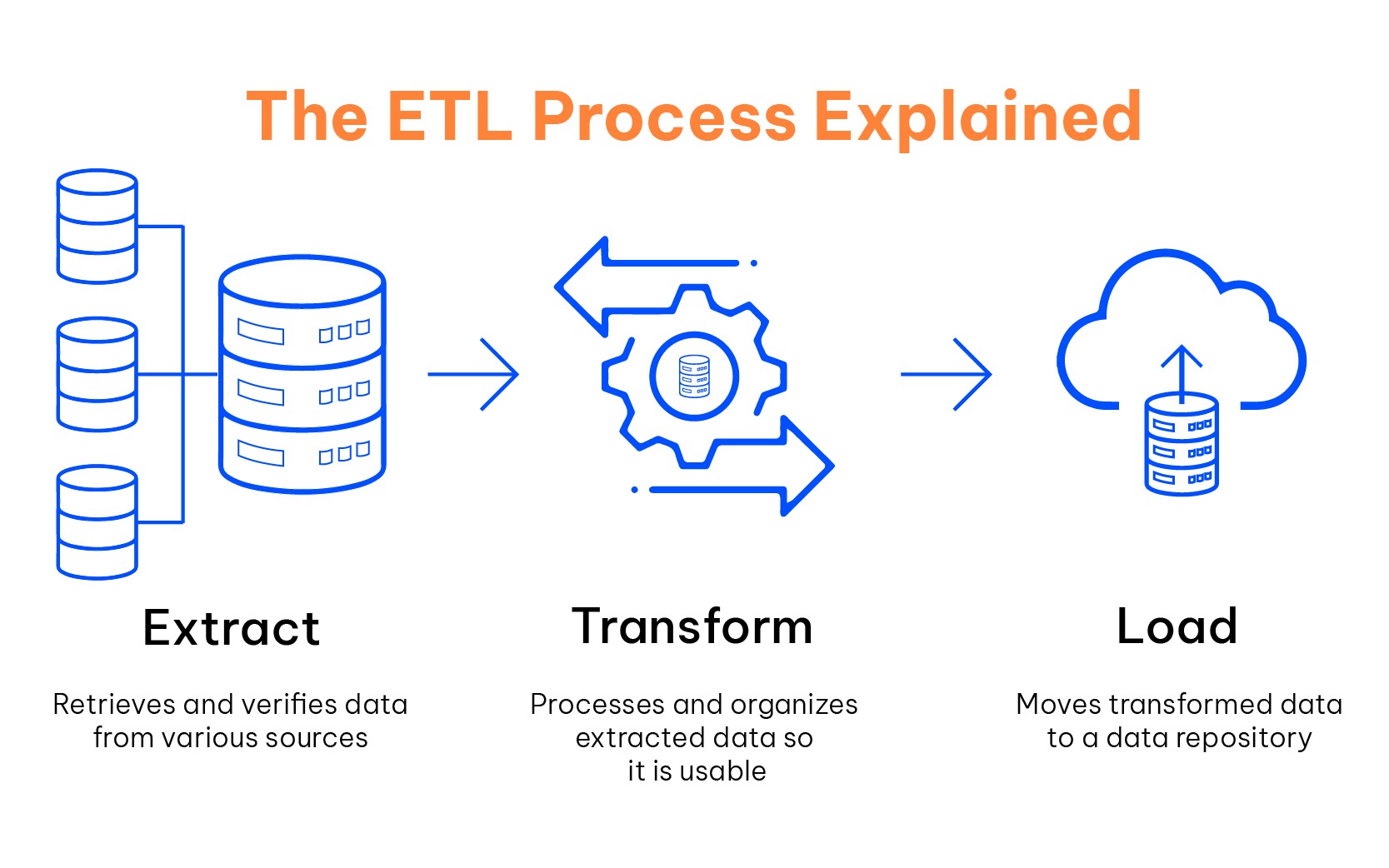

ETL (Extract, Transform, and Load) in the Data Warehouse

So what is the role of the ETL process in a data warehouse? How is it employed to streamline processes and enhance efficiency?

Definition

ETL stands for Extract, Transform, and Load, which are three steps in blending data from different sources into a unified view for future work.

According to Google Cloud, ETL is “the end-to-end process by which a company takes its full breadth of data—structured and unstructured and managed by any number of teams from anywhere in the world—and gets it to a state where it’s useful for business purposes.”

To think of it simply, you can imagine ETL as a clothes store and the products as data. The first step is to take these clothes from different clothing racks. Afterward, you have to wrap it in a floral pattern or polka dot wrapping paper according to the customer’s requirements. Finally, your task is to deliver the wrapped clothes into the customers’ bags.

How does ETL work?

Undeniably, ETL does not work simply as a clothes store as it requires a better understanding of data and its processes inside. As mentioned below, ETL plays the role of data integration and loading it into the data warehouse. ETL involves three stages:

Extraction

The first step of the ETL process is the extraction of data. The main function of this phase is to derive data from various sources as follows:

- Legacy databases and storage.

- Customer relationship management systems (CRM).

- Enterprise resource planning systems.

- Sensor data from the Internet of Things (IoT).

- Sales and marketing applications.

- Customer transaction data.

- Social media and other online sources.

The data sources could come from various formats, such as structured data (Data is available for actual extraction), unstructured data (Data needs some transformations like removing the whitespaces or emoticons before extraction), or even existing databases, CRM systems, Cloud, and data warehouses.

Three approaches to extracting data:

- Notifications-based data extraction: It is one of the easiest ways to derive data. Specifically, some data sources send notifications to the ETL system whenever a data change occurs. The extraction phase of ETL only needs to update the new data.

- Full Extraction: Some sources can detect the changes in the data, so ETL has to extract the entire data from the source. This extraction approach requires you to retain a copy of the last extract, which helps to compare it with the new copy. Because of high data transfer volumes, it is preferable to use the full extraction method for small tables.

- Partial Extraction: Unlike notifications-based data extraction, some sources do not notify the data changes but have a record of which data changed. Regularly, the ETL system assesses the sources to identify if there are any changes and progressively extracts the changed data. The pitfall of the extraction phase is that the ETL system can not diagnose the deleted records in the source data.

Transformation

Frequently, the data is unavailable for delivery to the data warehouse in its primary format. Hence, there are several sub-steps to processing raw data:

- Clean: it helps to remove irrelevant data and fix inconsistent or missing values.

- Standardize: it converts the data under different formats into a common form.

- De-duplicate: it eliminates redundant and repetitive data.

- Verify: it verifies the accuracy of the data. The ETL system locates and highlights irregular data in this step.

- Sort: it organizes the data according to types.

The data conversion needs no certain rules; this is called direct movement or pass-through data. However, in some cases, several conditions must be applied based on the requirement to ensure data quality and availability.

Load

The final step in the ETL process is the loading phase. At this stage, the transformed data is loaded into the new target location, the data warehouse. The data format in the target designation may be a text file, excel file, JSON file, XML file, or database.

There are two methods to load the transformed data:

- Full Load: The full load drives all transformed data into new, unique records in the data warehouse or repository as a single batch. This method is simpler but more time-consuming than incremental loading.

- Incremental Load: This loading approach distinguishes incoming data from what’s already on hand. The ETL system only adds new records if unique data is found. Although the incremental load is more manageable, data inconsistency may happen if there is a system failure. To decide the right load strategy, it is essential to consider many factors, including customized requirements and the characteristics of data destinations (speed, capacity, data interfaces).

Some common requirements are as below:

- Set up a layer for business intelligence and analytics over the data.

- Exploit data as a searchable database.

- Create a training model for a machine learning algorithm.

- Build up an alert system from the data.

Different types of ETL Tools

ETL tools are software applications to facilitate the ETL process, from extracting raw data, transforming it into a standard format, and loading this information into data warehouses. These tools help to facilitate data management systems and ensure data quality.

According to Hub Spot, there are four types of ETL tools defined based on the infrastructure and support organizations or vendors provide:

According to Hub Spot, there are four types of ETL tools defined based on the infrastructure and support organizations or vendors provide.

Enterprise software ETL tools

Commercial organizations develop and sell enterprise software ETL products. Since these businesses were the first to advocate for ETL tools, their solutions tend to be the most reliable and developed across the industries. These tools offer graphical user interfaces (GUIs) for designing and executing ETL pipelines. Additionally, it facilitates relational and non-relational databases such as JSON and XML, event streaming sources, and so on.

Some enterprise software ETL tools are as below:

- Informatica PowerCenter.

- IBM InfoSphere DataStage.

- Oracle Data Integrator (ODI).

- Microsoft SQL Server Integration Services (SSIS).

- Ab Initio.

- SAP Data Services.

- SAS Data Manager.

Open-source ETL tools

The growth of the open-source movement extends the capabilities, speed, and advancement of open-source ETL tools. Many open-source ETL tools are available freely and practically with the GUIs offered. It is used to design data-sharing processes and navigate information flow.

This ETL tool has both advantages and disadvantages. A benefit of open-source solutions is that the source code is accessible for organizations to explore the infrastructure of tools and expand capabilities. Nevertheless, a drawback of this tool is inconsistency, as commercial organizations don’t usually support it.

Some open-source ETL tools are:

- Talend Open Studio.

- Pentaho Data Integration (PDI).

- Hadoop.

Cloud-based ETL tools

Many cloud service providers (CSPs), such as Amazon AWS, Google Cloud Platform, and Microsoft Azure, have developed ETL tools on their own infrastructure. It has resulted from the proliferation of cloud computing and integration-platform-as-a-service.

Like open-source ETL tools, it has favorable and unfavorable influences. In terms of advantages, cloud-based ETL tools are latent, efficient, and elastic. Computer resources will expand to accommodate the current needs for data processing. In addition, the pipeline is further optimized if the enterprise archives its data in the same cloud service provider.

On the contrary, a shortcoming of cloud-based ETL tools is that they are highly proprietary and only available for a specific cloud vendor’s platform. The organization can not use data in different cloud storage and move them to their own data centers.

Some examples of cloud-based ETL tools are:

- AWS EMR.

- AWS Glue.

- Azure Data Factory.

- Google Cloud Dataflow.

Custom ETL tools

Many businesses create their own ETL solutions using general-purpose programming languages. This method is highly flexible and can generate an appropriate solution depending on the organization’s needs and workflow. By contrast, the drawback of this tool is that it requires the most effort and expense to launch and operate.

Popular programming languages to build their own ETL tools:

- SQL.

- Python.

- Java.

For more detailed insights into these four types of ETL tools, read our in-depth analysis here!

Conclusion

A data warehouse is a destination for storing data in a unified format. However, there is a need for the ETL process to derive data from many sources, convert it, and finally, load it into the data warehouse. With the advancement of Big Data, ETL has become more prominent in every industry.

Increasingly, the ETL environment has transformed greatly due to the high requirements of business initiatives and imperatives. According to Deloitte, future ETL will concentrate more on the data streams than the tools. Organizations are advised to put real-time latency, schema progression, source center, and constant integration into consideration. In prospect, the utilization of ETL will be widespread as it has become the ultimate solution for data integration, governance, quality, and security. Imagine What You Could Achieve with AI Streamlining Your Operations Transform your operations with precise, AI-driven workflows. From reducing errors to improving speed, achieve operational excellence and drive sustainable growth.

Your data can become even more helpful – Here’s how

With hands-on experience in all phases of the data pipeline, GEM’s comprehensive list of data services ensures your data is seamlessly integrated and readily available for critical business decisions.

We’re here to elevate the value you gain from this significant asset. Let’s talk!