Contents

The ETL (extract, transform, load) process is not mandatory for data integration and governance, but it remains one of the most effective methods for extracting data from various sources, transforming it into a usable format, and loading it into a data warehouse. With the rise of big data and the increasing demand for business intelligence, numerous ETL tools have emerged. This blog will compare popular ETL tools like AWS Glue, Pentaho, and Talend, discussing their pros and cons and showcasing practical use cases for each.

What are ETL tools?

Extract-Transform-Load (ETL) tools are specialized tools for extracting data from multiple sources, cleansing, transforming, customizing, and importing it to a data warehouse.

The ETL software market, valued at approximately $3.1 billion in 2019, is projected to grow to $10.3 billion by 2031. This represents a compound annual growth rate (CAGR) of 14.3% over the forecast period from 2024 to 2031. The significant market enlargement, together with rapid developments in technologies such as Big Data, the Internet of Things (IoT), and Cloud Computing, will drive even stronger advancements in ETL tools and solutions.

Recently, many organizations have taken advantage of ETL tools to manage big data sources’ volume, variety, and velocity. According to Talend, more than 50% of enterprise data is allocated to the cloud system, emphasizing the influence of external data sources in every company. Hence, it is urgent to implement modern tools to efficiently process and integrate data by entering data warehouse space and accommodating workloads.

How to evaluate ETL tools?

Currently, there are various technology providers, such as AWS, IBM, Oracle, Talend, etc., offering ETL solutions. However, each enterprise has to decide which ETL tools are most efficient and match their operations. A standard framework was conducted based on academic research to compare ETL tools against each other after referencing different articles, journals, and books. There are four categories in the finalized framework as below:

- Price

- Functionality

- Ease of use

- Architecture

Price

The first category is “Price”. The Oracle expert Abramson rated costs as one of the most important criteria for assessing an ETL tool. This criterion involves several fundamental “costs” that the organizations have to take into account:

- License costs

- Operating costs

- Support costs – which occur when you need additional service support.

- Hardware cost – the cost of buying the hardware needed to run the program

Functionality

As a criterion for evaluating ETL tools, “Functionality” focuses on checking whether the tool can process its data.

It relates to a dozen of functionalities, namely:

- Basic processing support

- Performance

- Transformations

- Cleansing

- On-demand support

- Secure Packages

- ETL reporting

- Scheduling

- Metadata

- Rollback

- Connectivity

- Calculation

- Data Warehouse support

- Aggregation

- Reorganization.

Ease of use

This criterion evaluates the usability of ETL tools. Since user preferences vary, it is challenging to set a universal set of standards in this aspect. However, we can agree on the following criteria.

- Completeness of the GUI (Graphical user interface) with a good visual interface

- Customizable code to allow users to enter or customize source code

- An integrated toolset to make them accessible to users and allow for the purchase of additional tools

- Debugging support, which allows users to set breakpoints in the process to examine data and workflow to quickly fix errors

- Source control, which allows users to select, manage, and integrate multiple data sources seamlessly, ensuring efficient and cohesive data extraction, transformation, and loading.

Architecture

The criterion “Architecture” refers to the hardware and operating system (OS) specifications supported by the software, including platform compatibility, backup capabilities, and performance metrics.

- Platform compatibility: The ability of the ETL tool to operate across various hardware and OS environments.

- Backup capabilities: The effectiveness and reliability of the tool’s backup features to ensure data safety and recovery.

- Performance metrics: The efficiency and speed of the ETL processes, including data throughput and resource utilization.

4 types of ETL tools

Recently, many ETL tools have been transformed and developed to satisfy various needs and requirements of users. These appliances fall into different categories based on several criteria such as functionality, structure, volume performance, etc. According to Hubspot, there are four basic types of ETL tools, including Open-source, Cloud-based, Enterprise, and Custom ETL tools. Find the AI Solution That Fits Your Business Perfectly Confused about the right AI approach? Let us help you uncover a solution tailored to your goals and challenges.

Open-source ETL tools

Open-source tools are freely available and created by software developers. Each tool has distinct characteristics in terms of quality, integration, ease of use, adoption, and availability of support. Recently, there have been many open-source options for organizations to take into account, namely Pentaho, Hadoop, Hevo Data, Airbyte, etc. One popular example of open-source ETL tools is Pentaho.

Pentaho

Pentaho Kettle Enterprise, also known as Pentaho Data Integration, is a platform’s ETL model. Accordingly, it allows data to be extracted from numerous sources, transformed, and loaded into the Enterprise Data Warehouse (EDW), either a relational database or a NoSQL database. The organization could utilize the Pentaho tool for further transformation from EDW to Data Marts or Analytic Databases.

The table below indicates a comparison between Pentaho and other ETL tools:

| Feature | Pentaho Kettle Enterprise | Informatica Power Center | Microsoft SSIS | Talend Integration Suite | IBM Data Stage | Clover ETL |

| Easy to use | Yes | No | No | No | No | No |

| Web-based UI | No | Yes | No | No | Yes | No |

| Multi-role Team collaboration | No | No | No | No | No | No |

| Large volume Performance | Yes | Yes | Yes | Yes | Yes | Yes |

| Data partitioning | No | Yes | No | Yes | Yes | Yes |

| Complex transformation | Yes | Yes | Yes | Yes | Yes | Yes |

| Plug-in external programs | No | Yes | No | Yes | Yes | No |

| Data validations | Yes | Yes | Yes | Yes | Yes | Yes |

Case study – GEM’s Client ETL project

Background: GEM assisted a major client in the Telecommunication sector with their ETL and EL (Extract and Load) processes. The client required frequent data updates—daily, hourly, or even by the minute—to support their business operation.

Problem: Managing the vast and diverse data sources daily was a significant challenge. Pentaho was chosen as a suitable solution to meet the client’s demands and GEM utilized ETL and EL processes to help the client retrieve and manage massive amounts of data.

Solutions

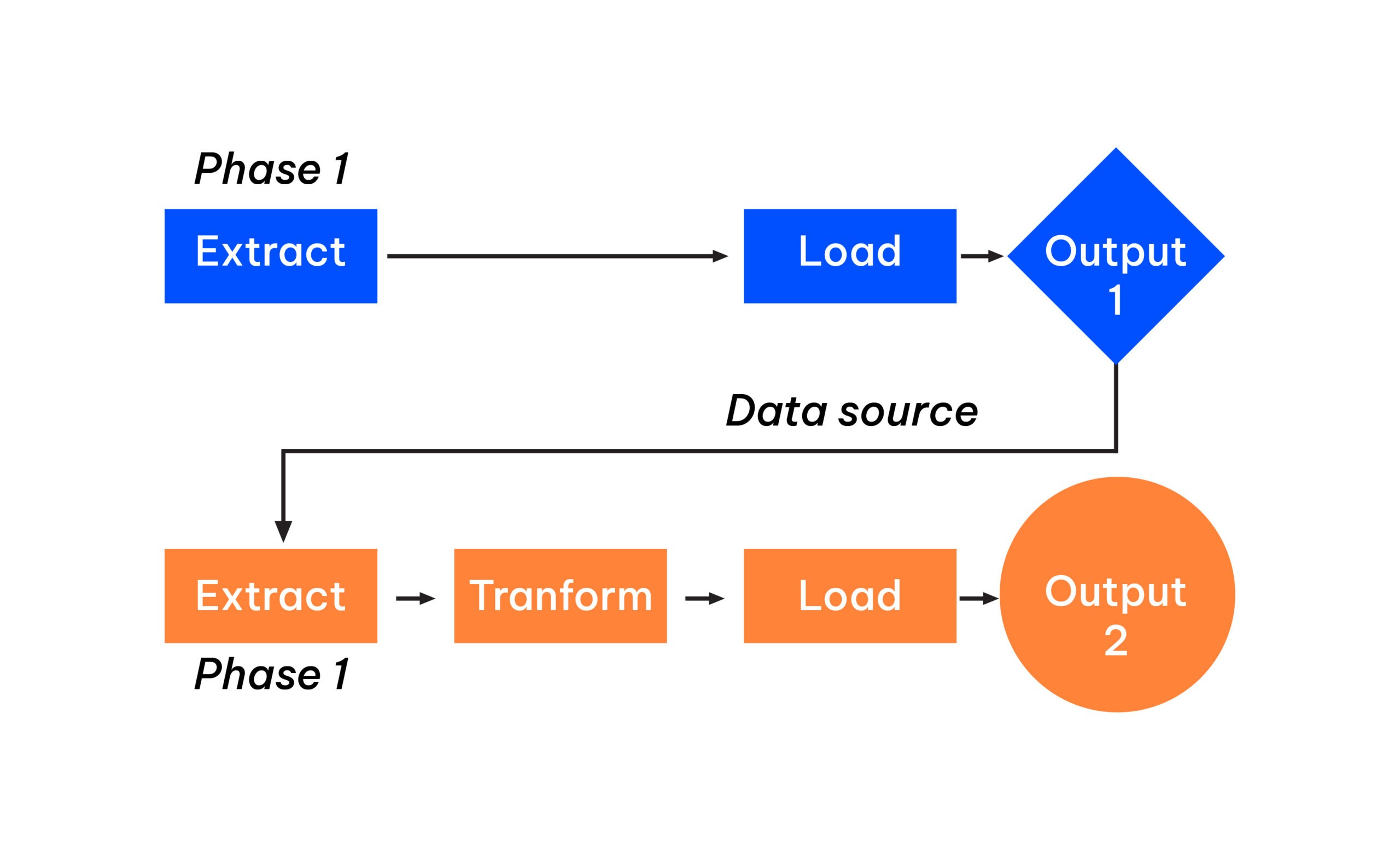

This work comprises two phases: phase 1 – EL process and phase 2 – ETL process, as shown in the map below. Throughout both phases, the GEM team leveraged the Pentaho tool to run EL and ETL processes.

- Phase 1: Raw data was extracted from the data source provided by the client, encompassing various types (structured, unstructured, etc.). The GEM team then loaded all the extracted data into a data warehouse. The loading step delivered customized Output 1 according to the client’s requirements, which became the data source for Phase 2.

- Phase 2: GEM continually drew out data from Output 1. The next step involved storing all the data from the previous step, regardless of the type. Subsequently, the data was transformed according to the client’s needs. The final result was Output 2, which consisted of data marts or data lakes. The converted data then served as material for data visualization or reporting.

Results: GEM helped the client standardize the data processing and governance to unlock effective data-driven decision-making and predictions.

Cloud-based ETL tool

Many cloud service providers (CSPs), such as Amazon AWS, Google Cloud Platform, and Microsoft Azure, have developed ETL tools on their own infrastructure. It has resulted from the proliferation of cloud computing and integration-platform-as-a-service. Currently, AWS has taken the largest market share among various cloud-based ETL tools.

AWS Glue

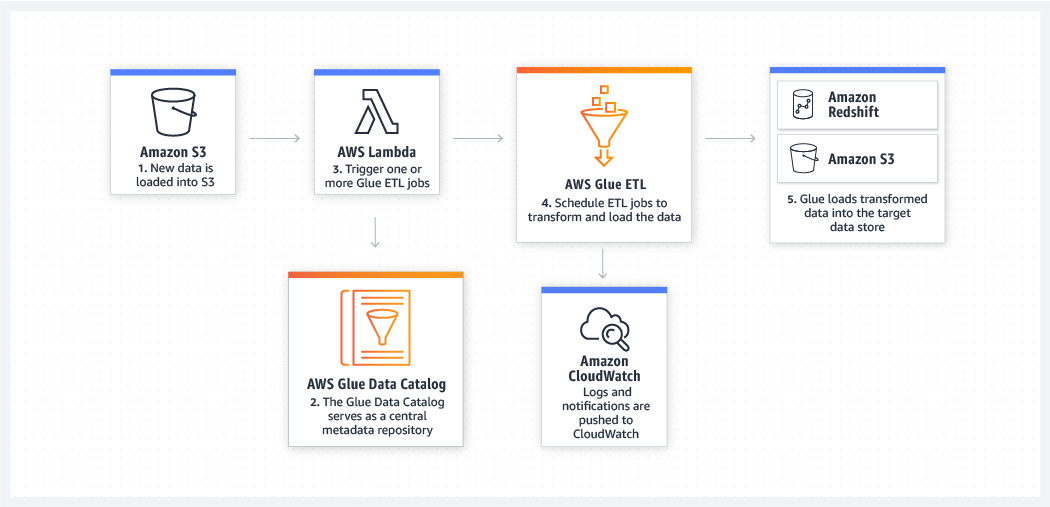

AWS is a prevalent cloud-based ETL tool that assists visual and code-based clients, which would deliver sound business intelligence. The serverless platform provides multiple features, such as the AWS Glue Data Catalog for detecting data from various organizations and the AWS Glue Studio for visually arranging, performing, and managing ETL pipelines.

The map below is an illustration of how to create, run, and assess the ETL process without writing code, thanks to AWS Glue Studio. Initially, you just compose ETL tasks to move and transform data with the drag-and-drop editing tool. Then AWS Glue will automatically develop code for your tasks. Additionally, the task runtime console of AWS Glue Studio allows you to manage ETL execution and track the progress.

Case study – Burt Corporation

Burt Corporation, a start-up data company, delivers data intelligence and analytics solutions to many major online publishers, which helps to understand and optimize the clients’ online marketing strategies. The company has implemented various tools such as AWS Glue, Amazon Redshift, and Amazon Athena to foster efficient data collection, processing, analysis, and decision-making capabilities.

Commercial ETL tool (Enterprise)

Commercial tools distinguish themselves from the other types with two notable features: Modification and Data inputs.

- Modification: While open-source software allows basic rights to the general public, commercial tools are modified by only the organization that created them.

- Data input: Unlike cloud-based tools that only process online data sources, commercial ones accept sources from online and offline databases.

Commercial tools offer graphical user interfaces (GUIs) for designing and executing ETL pipelines. It also facilitates relational and non-relational databases such as JSON and XML, event streaming sources, etc.

The next section will investigate Informatica PowerCenter as an example for a better understanding of commercial ETL tools.

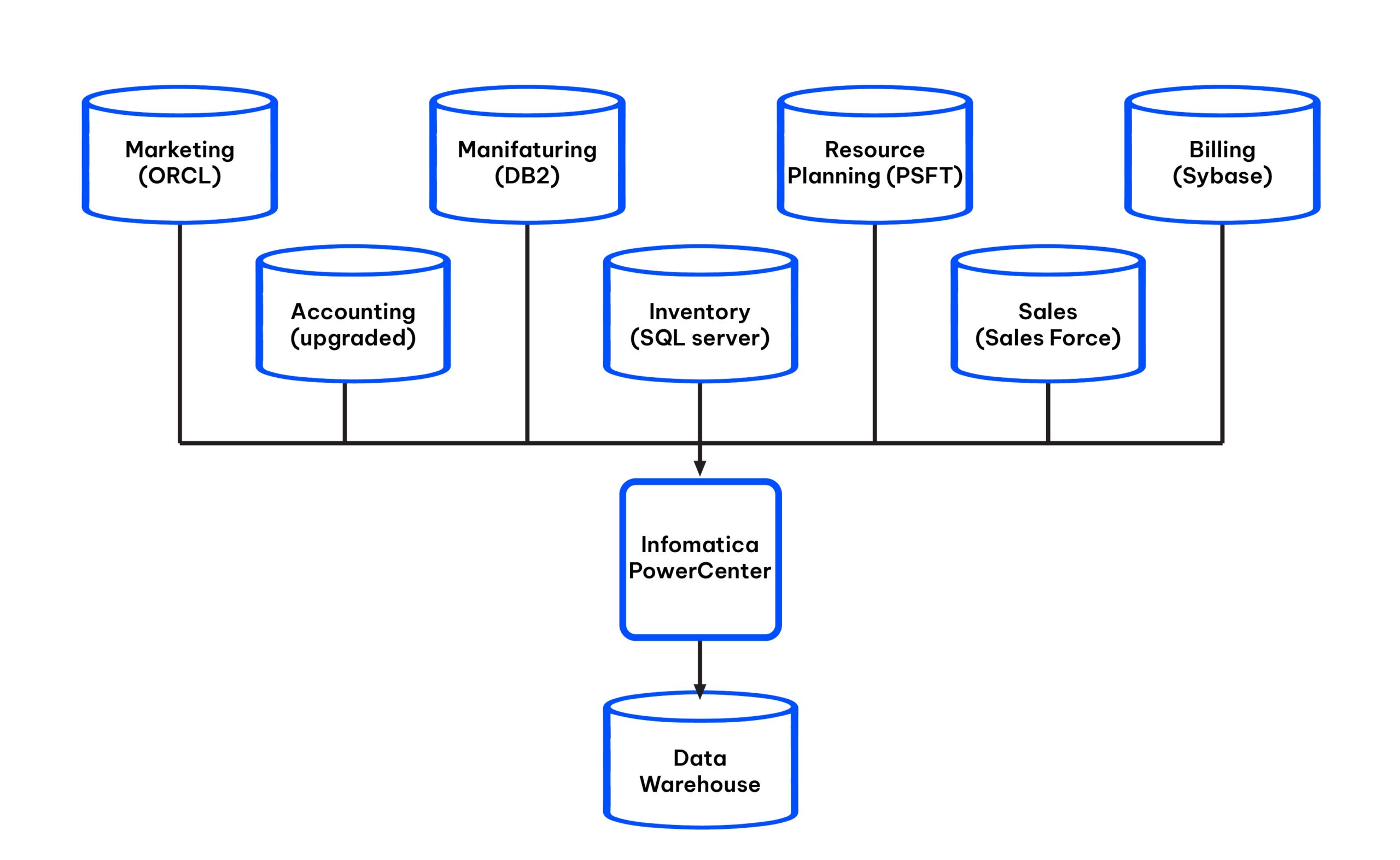

Informatica PowerCenter

Currently, Informatica is an industry leader in ETL. It has the best-in-class data integration products for quickly integrating data and applications.

Informatica PowerCenter is an on-premise ETL tool that can link to a number of different legacy database systems. The tool also allows for data governance, monitoring, master data management, and masking. Users can view servers on the company’s premises using ETL, a batch-based ETL application with a cloud counterpart. It also provides a number of data management and software-as-a-service options.

Informatica is an ETL tool that can be used to build corporate data warehouses. It also provides a range of data masking, duplicate data, merger, consistency, and ETL products. The ETL tool allows users to connect to and view data from a variety of sources, as well as perform data processing.

| Pros | Cons |

| – Easier for server manager to schedule – Informatica’s archive manager can help with data preservation and recovery – Informatica is a mature, eminent business data integration framework |

– Informatica is a bit expensive. It is costlier than Datastage but cheaper than Ab Initio. – To use Informatica’s services, one must pay the single and multi-user licensing costs – Only available on a commercial basis. – Informatica’s custom code incorporation through Java conversion is relatively complicated |

Case study – Radobank

Rabobank is a multinational banking and financial services company. The organization is a global leader in food and agricultural financing and sustainability-oriented banking, with a mission to grow a better world together.

Goals

- Understand data lineage across the bank to create business value and growth opportunities while driving digital transformation

- Comply with regulatory requirements for financial services, such as BCBS 239 for risk data aggregation and reporting

- Promote data governance and improve overall data quality to help increase the value of data assets and maintain that value over time

Solutions

“Informatica Enterprise Data Catalog helps us increase and promote the value of our data assets, which are the foundation of everything we do.”, Anil Bandarupalli – Solution Architect for Data Management Rabobank said.

- Inventory and catalog data using Informatica Enterprise Data Catalog for end-to-end data lineage and complete tracking of data movement

- Give employees an easy-to-use interface and simple search to quickly discover data and its lineage for auditors or internal projects

- Reduce the duration of root cause analysis processes to empower data stewards to identify and remediate data quality issues faster

Custom ETL tool

Despite the widespread use of graphical user interface (GUI)-based solutions, some organizations choose hand-coded ETL tools for their ETL processes. In some contexts, the custom approach could be cheaper, faster, and more attainable than GUI-based tools.

Enterprises would build their custom ETL tools based on programming languages such as Python, R, Java, etc. In academic research, the authors introduced several custom tools, namely Pygrametl and Petl (Python-based), Scriptella (Java-based), etl (R-based).

Petl

The most notable example is Petl, a general-purpose Python library that can carry out typical ETL processes, supported by the MIT License. PETL which is used for extracting, transforming, and loading tables of data.

The design of Python ETL (petl) is easy and convenient for users. Hence, the tool is preferable for working with mixed, unfamiliar, or heterogeneous data. In addition, you can build tables in Python from various data sources such as CSV, XLS, HTML, TXT, JSON, etc, and drive them to the prescribed data storage. Another benefit of using Petl is that it can be used for migrating between SQL databases efficiently and smoothly.

Closing remark

ETL tools are of immense importance in the field of business intelligence. Using a proper ETL tool might drastically affect the business outcome. Hence, it is important to choose the right ETL tool according to business requirements and investments. This blog presented a review of distinct features of some mainline ETL Tool suites and how these tools are applied in reality. In the future, the market of ETL tools will expand significantly due to the demand for data integration and governance. More importantly, these tools have always been transformed and upgraded, which requires humans to learn and adapt to changed things. Stop trying to find the dots. Let us help you connect them! With GEM as your ServiceNow Partner, accelerate delivery, reduce complexity, and gain a competitive edge in today’s fast-changing market.

Elevate your data strategy today

Unlock the full potential of your data with GEM Corporation’s comprehensive data services. From ETL tools to advanced analytics, our expertise ensures your data processes are optimized for success.

Let GEM know how we can reach you via the form below and let’s turn data into a strategic asset!