
Manual testing remains a core discipline in software quality assurance, particularly where human judgment and real-user behavior must be assessed. While automation continues to expand, many teams still rely on manual approaches for functional, interface, and exploratory validation. According to Testlio, over two-thirds of QA teams still apply manual methods in their testing cycles. This article explains 12 types of manual testing, each applied at a different stage of the development lifecycle. From unit-level checks to system-wide evaluations, these techniques reflect the range of conditions software must meet before release.

Why Manual Testing Remains Irreplaceable

While automated testing brings speed and consistency, manual testing provides depth in areas where tools fall short. It plays a key role in verifying software behavior when human judgment, adaptability, and context are required.

- Contextual Understanding

Manual testers evaluate software with a full view of business processes, user roles, and edge cases. They can assess whether the system behaves as expected under real-world conditions, including non-standard inputs or unusual navigation patterns.

- Adaptability During Change

In fast-moving development cycles or early-stage products, requirements often shift. Manual testing adapts quickly without the lead time needed to adjust automated scripts. This flexibility supports frequent iterations and evolving functionality.

- Exploratory and Ad Hoc Scenarios

Exploratory testing relies on the tester’s intuition and experience to uncover hidden defects. These scenarios are not pre-defined, making them difficult to automate. Manual testers can follow unexpected paths and uncover issues that structured scripts may overlook.

- Subjective Evaluation

Certain aspects of software, such as UI clarity, content accuracy, or overall usability, require human assessment. Manual testing helps teams catch inconsistencies, layout issues, or confusing interactions that affect user experience.

- Support for Compliance and Risk Mitigation

In sectors with regulatory or legal requirements, manual testing helps verify documentation, workflows, and user interactions for compliance. These areas often require detailed reviews that go beyond functional correctness.

Manual testing is not a substitute for automation but a complementary practice that addresses quality dimensions automation cannot cover.

12 Types of Testing in Manual Testing

Manual testing spans a range of techniques. Some are defined by how much visibility the tester has into the internal structure of the software (black, white, and gray box), while others are defined by the scope or purpose of the test. The sections below cover each type in turn.

Black box testing

Black box testing focuses on validating functionality from an external perspective, without insight into the internal code or logic. Testers evaluate inputs and outputs based on requirements and user expectations. Common black box testing techniques include:

- Equivalence Partitioning: Dividing input data into valid and invalid partitions to test representative values.

- Boundary Value Analysis: Testing at the edges of input ranges, where defects often occur.

- Decision Table Testing: Using input combinations and corresponding outputs to verify rules and conditions.

- State Transition Testing: Verifying system behavior as it transitions between different states based on input events.

- Error Guessing: Drawing on past experience to identify failure-prone areas likely to produce defects.

Black box testing is often applied in functional, system, and acceptance testing phases.
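
To make the first two techniques concrete, the sketch below derives test inputs for a single numeric field. The rule under test (`is_valid_age`, accepting ages 18 through 65) and the helper `boundary_values` are hypothetical names chosen for illustration; a tester would substitute the ranges stated in the actual requirements.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Return the usual boundary candidates for an inclusive range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: ages 18 through 65 inclusive are accepted."""
    return 18 <= age <= 65


# Equivalence partitioning: one representative value per input class.
partitions = {"below range": 10, "in range": 40, "above range": 80}
for name, value in partitions.items():
    print(f"partition {name}: {value} -> {is_valid_age(value)}")

# Boundary value analysis: values on and just around each edge of the range.
for value in boundary_values(18, 65):
    print(f"boundary {value} -> {is_valid_age(value)}")
```

The same list of values can be used as a checklist when entering data by hand in the application's form.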

White box testing

White box testing is performed with full access to the internal logic and code structure. It’s typically conducted by developers or testers with coding knowledge, focusing on how the system works behind the scenes. Key white box testing methods include:

- Statement Testing: Verifying that every executable statement in the code is run at least once.

- Branch Testing: Testing all possible branches in control structures (e.g., if/else).

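- Path Testing: Executing the independent paths through the code to detect logic errors. Covering every possible path is rarely feasible once loops and nested conditions are involved, so testers usually target a representative set.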

- Loop Testing: Assessing the behavior of code loops under various conditions, including zero, one, and multiple iterations.

- Condition Testing: Evaluating logical conditions for true and false outcomes.

White box testing strengthens code reliability and is often used during unit and integration testing.
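
As a small illustration of branch testing, the sketch below uses a hypothetical function, `shipping_fee`, with two decision points, and a set of cases chosen so that each decision evaluates to both true and false at least once. The function and its fee values are assumptions made up for this example.

```python
def shipping_fee(order_total: float, is_member: bool) -> float:
    """Hypothetical function under test with two decision points."""
    if order_total >= 50:
        return 0.0
    if is_member:
        return 2.5
    return 5.0


# Each tuple is (order_total, is_member, expected_fee).
# Together the cases take every branch outcome at least once.
branch_cases = [
    (60.0, False, 0.0),  # first condition true
    (20.0, True, 2.5),   # first condition false, second true
    (20.0, False, 5.0),  # first condition false, second false
]

for total, member, expected in branch_cases:
    assert shipping_fee(total, member) == expected
```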

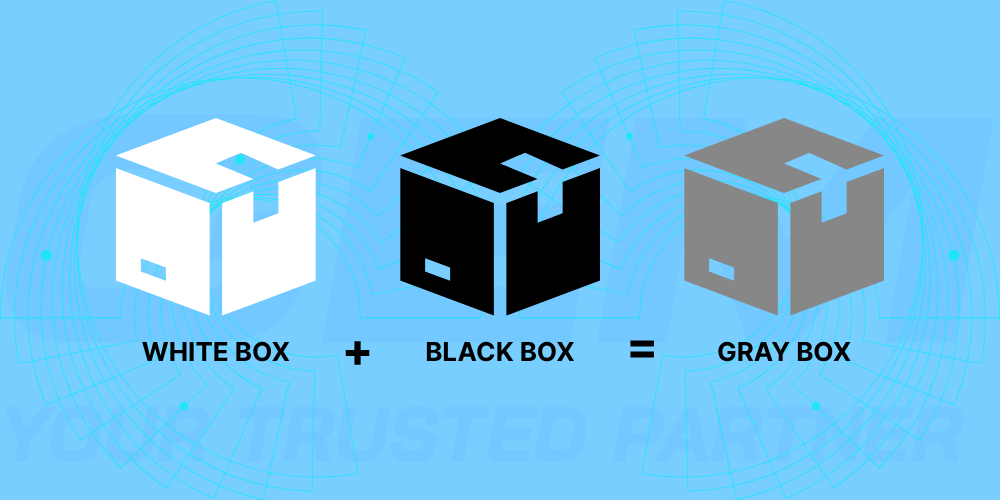

Gray box testing

Gray box testing combines elements of both black and white box testing. Testers have partial knowledge of internal components, allowing them to design more informed test scenarios while maintaining a user-centric approach. Common gray box testing practices include:

- Session-Based Testing: Structuring exploratory tests into time-boxed sessions guided by known system behavior.

- Database Testing: Verifying data integrity, table relationships, and query accuracy with awareness of the backend structure.

- Access Control Tests: Assessing how authorization and authentication mechanisms behave under different user roles.

- Interface Testing: Validating interactions between integrated modules from both the input/output and internal perspective.

This hybrid method is typically used in integration, security, and end-to-end testing, where insight into architecture improves test coverage without requiring full code access.
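
An access control test is a natural fit for gray box work, because the tester knows the permission model but exercises it from the outside. The sketch below assumes a simple role-to-permission table; the `PERMISSIONS` mapping, role names, and helper functions are hypothetical and stand in for whatever the real system defines.

```python
# Hypothetical permission model known to the tester.
PERMISSIONS = {
    "admin": {"read", "write", "delete"},
    "editor": {"read", "write"},
    "viewer": {"read"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True if the role is granted the action; unknown roles get nothing."""
    return action in PERMISSIONS.get(role, set())


def access_matrix(roles: list[str], actions: list[str]) -> list[tuple[str, str, bool]]:
    """Build the role/action combinations a tester would walk through."""
    return [(role, action, is_allowed(role, action)) for role in roles for action in actions]


for role, action, expected in access_matrix(["admin", "editor", "viewer"], ["read", "write", "delete"]):
    print(f"{role:7} {action:7} expected access: {expected}")
```

The printed matrix serves as the expected-result column; the tester then logs in under each role and confirms the application behaves the same way.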

Unit testing

Unit testing focuses on verifying individual components or functions in isolation. Typically written and executed by developers, these tests confirm that specific code units behave as expected under defined conditions. Key elements of unit testing include:

- Function-Level Validation: Each function or method is tested with both valid and invalid input.

- Mocking External Dependencies: External calls (e.g., APIs, databases) are simulated to isolate the unit under test.

- Assertion-Based Checks: Output values are compared with expected results using assertions.

- Code Coverage Measurement: Tools are often used to track how much of the codebase is exercised through tests.

Unit testing supports early defect identification and contributes to code stability during development.
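
The mocking and assertion elements above can be sketched with Python's standard `unittest.mock` module. The function `price_in_currency` and the `get_rate` method on the rate client are hypothetical; the point is only that the external dependency is replaced so the unit can be checked in isolation.

```python
from unittest.mock import Mock


def price_in_currency(amount_usd: float, currency: str, rate_client) -> float:
    """Hypothetical unit under test: converts a USD amount using an external rate service."""
    rate = rate_client.get_rate("USD", currency)
    return round(amount_usd * rate, 2)


def test_price_in_currency() -> None:
    # Replace the external service with a mock that returns a fixed rate.
    fake_client = Mock()
    fake_client.get_rate.return_value = 0.5

    # Assertion-based check on the output value.
    assert price_in_currency(10.0, "EUR", fake_client) == 5.0

    # Confirm the dependency was called exactly once with the expected arguments.
    fake_client.get_rate.assert_called_once_with("USD", "EUR")


test_price_in_currency()
```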

Sanity testing

Sanity testing is a focused testing effort conducted after receiving a new build or a minor update. It helps testers determine whether the specific changes or bug fixes were applied correctly and did not affect related functionality. Characteristics of sanity testing include:

- Targeted Scope: Only the modified modules and related areas are tested.

- Quick Execution: Tests are run without detailed documentation or comprehensive planning.

- Change Validation: Verifies that reported defects are resolved or that new features are functioning as described.

- Short Feedback Cycle: Helps QA teams decide if further testing is feasible with the current build.

Sanity testing is commonly used when time is limited and the scope of change is narrow.

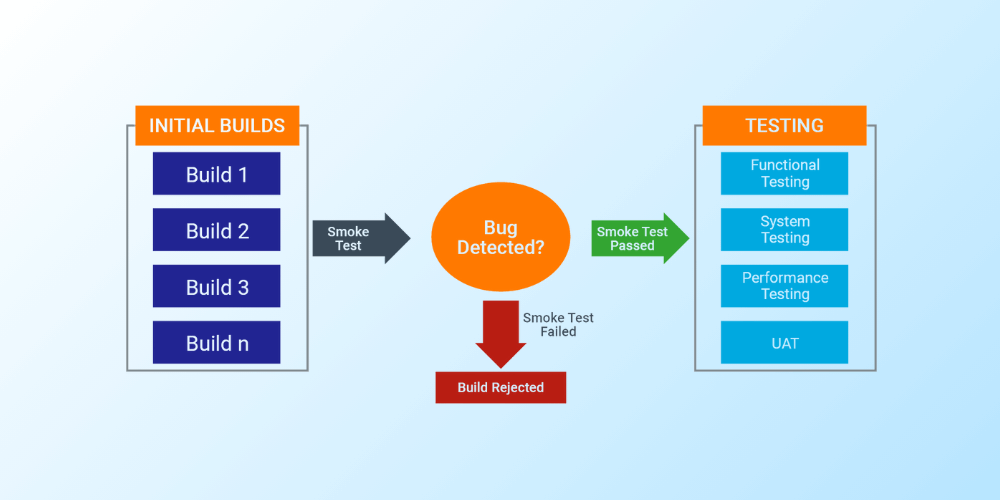

Smoke testing

Smoke testing is a preliminary check performed on a new build to confirm that the core functionalities of the application are working. It acts as a gatekeeping step before more in-depth testing begins. Typical aspects of smoke testing include:

- Basic Functionality Checks: Validates core workflows such as login, navigation, and data input.

- Build Verification: Confirms that the application launches, responds, and performs basic tasks.

- Minimal Test Set: A small number of high-level test cases are executed to assess the stability of the build.

- Early Defect Detection: Any major failure at this stage signals that the build is not ready for detailed testing.

Smoke testing helps QA teams avoid investing time in builds that are not ready for functional validation.
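
The gatekeeping idea can be expressed as a short checklist runner: if any core check fails, the build is rejected before detailed testing starts. This is a sketch under assumptions; the check names are invented, and the lambdas are placeholders for whatever manual or scripted verification the team actually performs.

```python
from typing import Callable


def run_smoke_suite(checks: list[tuple[str, Callable[[], bool]]]) -> list[str]:
    """Run each named check and return the names of those that failed."""
    failures = []
    for name, check in checks:
        try:
            passed = check()
        except Exception:
            # A crash during a basic check counts as a failure.
            passed = False
        if not passed:
            failures.append(name)
    return failures


def build_is_testable(checks: list[tuple[str, Callable[[], bool]]]) -> bool:
    """The build moves on to detailed testing only if no smoke check failed."""
    return not run_smoke_suite(checks)


# Placeholder checks; in practice each would exercise the real build.
example_checks = [
    ("application launches", lambda: True),
    ("login works", lambda: True),
    ("dashboard loads", lambda: False),
]
print("failed checks:", run_smoke_suite(example_checks))
```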

Regression testing

Regression testing involves re-executing test cases after code changes to confirm that existing functionality remains unaffected. It is commonly used after bug fixes, enhancements, or system upgrades. Core practices under regression testing include:

- Re-running Functional Tests: Previously passed test cases are executed again to check for unintended effects.

- Prioritizing High-Risk Areas: Modules with frequent changes or historical defects receive more attention.

- Maintaining a Regression Suite: A curated set of test cases is created and updated over time to reflect current system behavior.

- Scheduling with Each Build: Regression tests are typically run with every new version to support consistent releases.

This testing type prevents reintroduction of known defects and supports product stability across iterations.
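
One way to apply the prioritization practice is to order the regression suite so that cases covering recently changed modules run first, followed by modules with the most historical defects. The sketch below shows that ordering rule; the `RegressionCase` fields and the ranking criteria are illustrative assumptions, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class RegressionCase:
    case_id: str
    module: str
    past_defects: int        # defects previously found in this module
    recently_changed: bool   # whether the module changed in the current build


def prioritize(cases: list[RegressionCase]) -> list[RegressionCase]:
    """Order cases: changed modules first, then by defect history, then by ID."""
    return sorted(cases, key=lambda c: (not c.recently_changed, -c.past_defects, c.case_id))


suite = [
    RegressionCase("RC-1", "login", past_defects=1, recently_changed=False),
    RegressionCase("RC-2", "checkout", past_defects=4, recently_changed=True),
    RegressionCase("RC-3", "search", past_defects=0, recently_changed=True),
    RegressionCase("RC-4", "profile", past_defects=3, recently_changed=False),
]

for case in prioritize(suite):
    print(case.case_id, case.module)
```

When time is short, the team can run the list from the top and stop at an agreed cut-off.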

UI testing

UI testing examines the application’s user interface to verify that visual elements, layout, and interactions meet design expectations. It also checks that the interface responds correctly to user actions. Manual UI testing focuses on the following:

- Element Visibility and Alignment: Buttons, fields, and labels are reviewed for correct positioning and display.

- Interactive Behavior: Testers validate dropdowns, modals, and form elements under different inputs and screen sizes.

- Content Accuracy: Text, messages, and labels are checked for completeness, grammar, and clarity.

- Cross-Device and Cross-Browser Checks: The interface is assessed on various platforms to confirm consistent behavior.

UI testing supports usability by aligning visual presentation with functional behavior.

Exploratory testing

Exploratory testing is a hands-on approach where testers actively explore the application without predefined test cases. It relies on domain knowledge, experience, and intuition to identify defects that scripted tests may not capture. Key practices include:

- Session-Based Testing: Testing is structured into time-boxed sessions, each with defined goals or areas of focus.

- Real-Time Documentation: Observations and discovered issues are documented as the session progresses.

- Follow-Up Scenarios: Unexpected findings prompt additional, unscripted tests to probe deeper into system behavior.

- Focus on Uncovered Areas: Less-tested modules or new features are often targeted to increase coverage.

Exploratory testing surfaces issues in real-world workflows and complements structured testing by addressing gaps.

System testing

System testing is a full-scale evaluation of the complete and integrated application. It checks the behavior of the entire system against documented requirements, covering both functional and non-functional aspects. This test type is conducted in a controlled environment, often by a dedicated QA team. Key areas typically covered include:

- Functional Testing: Validates that features operate according to specifications.

- Performance Testing: Assesses response time, stability, and scalability under expected load.

- Security Testing: Identifies potential vulnerabilities in authentication, data handling, and access control.

- Compatibility Testing: Verifies system behavior across browsers, devices, or operating systems.

- Error Handling Testing: Confirms the system responds correctly to invalid inputs or system faults.

System testing ensures the application performs consistently as an integrated whole, including modules, interfaces, and external dependencies.

Integration testing

Integration testing focuses on the interaction between system components, verifying that data flows and communication between modules occur as intended. This testing is carried out after unit testing and before system testing. Common integration testing approaches include:

- Top-Down Testing: Starts with high-level modules and integrates lower-level components step by step.

- Bottom-Up Testing: Begins with unit-level modules and progressively integrates higher-level components.

- Big Bang Testing: Combines all components at once and tests the complete system interaction.

- Incremental Testing: Adds and tests modules in phases, making it easier to isolate defects. Top-down and bottom-up are both incremental strategies.

- Interface Testing: Focuses on the data exchange and communication between modules, APIs, or services.

Integration testing helps teams detect defects in data mapping, interface communication, and service coordination before full system testing begins.
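
In top-down integration, lower-level modules that are not ready yet are replaced by stubs so the higher-level module can still be exercised. The sketch below assumes a hypothetical `OrderService` that depends on a payment gateway; both stub classes and the response format are invented for the example.

```python
class ApprovingGatewayStub:
    """Stand-in for a payment module that is not yet integrated; always approves."""

    def charge(self, amount: float) -> dict:
        return {"status": "approved", "amount": amount}


class DecliningGatewayStub:
    """Second stub used to exercise the failure path of the interface."""

    def charge(self, amount: float) -> dict:
        return {"status": "declined", "amount": amount}


class OrderService:
    """Hypothetical high-level module under test."""

    def __init__(self, gateway) -> None:
        self.gateway = gateway

    def place_order(self, amount: float) -> str:
        result = self.gateway.charge(amount)
        return "confirmed" if result["status"] == "approved" else "rejected"


print(OrderService(ApprovingGatewayStub()).place_order(25.0))
print(OrderService(DecliningGatewayStub()).place_order(25.0))
```

Once the real gateway is available, it replaces the stubs and the same scenarios are repeated against the actual interface.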

Acceptance testing

Acceptance testing is the final phase of validation before a product goes live. It confirms whether the system meets business requirements and is ready for use by end users. This testing is usually performed by QA teams, stakeholders, or clients. Common forms of acceptance testing include:

- User Acceptance Testing (UAT): End users execute test cases based on real-world scenarios to verify that the system supports their workflows.

- Business Acceptance Testing (BAT): Focuses on validating business rules, compliance needs, and process alignment.

- Contract Acceptance Testing: Confirms that the delivered product meets the agreed terms and specifications.

- Regulation Acceptance Testing: Also called regulatory acceptance testing, this applies in regulated industries to validate adherence to legal or policy standards.

Acceptance testing helps stakeholders confirm that the product is ready for deployment and aligns with the intended use.

With these testing types, QA teams can validate both the functional and experiential dimensions of software.

How to Perform Manual Testing Correctly and Effectively?

Manual testing requires a methodical approach to deliver consistent results across development cycles. A structured workflow supports traceability, accountability, and alignment with product goals.

Requirements

Begin by reviewing product requirements, user stories, and technical specifications. The objective is to understand what needs to be tested and under what conditions. Key actions at this stage include:

- Reviewing functional and non-functional requirements

- Identifying acceptance criteria and business rules

- Clarifying assumptions or constraints with stakeholders

Clear and complete requirements form the foundation for accurate test coverage.

Test plan

The plan outlines how testing will be organized and executed. It also defines the boundaries of the effort. During this phase, teams:

- Outline the features, modules, or workflows in scope

- Choose methods suitable for the application type (e.g., functional, exploratory, compatibility)

- Set milestones for test case creation, execution, and closure

- Assign responsibilities across QA, development, and business stakeholders

- Flag tools and environments required for test execution

This document acts as a coordination tool across roles and timeframes.

Write test cases

Test cases translate requirements into precise actions and expectations. They give structure to the testing effort and help teams trace defects back to specific conditions. Testers typically:

- Break down features into testable units

- Design input combinations that reflect both standard and edge scenarios

- Document preconditions, test data, and environment setup

- Define expected outcomes in measurable terms

- Organize cases into suites by module, risk area, or release cycle

Each case serves as a reference point for execution and future maintenance.
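
The fields listed above map naturally onto a structured record, which also makes it easy to organize cases into suites. The sketch below is one possible layout; the `ManualTestCase` class, its field names, and the sample IDs are assumptions for illustration rather than the schema of any particular test management tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ManualTestCase:
    case_id: str
    requirement_id: str      # links the case back to its requirement
    title: str
    preconditions: list[str]
    steps: list[str]
    expected_result: str     # stated in measurable terms
    suite: str               # module, risk area, or release cycle


def group_by_suite(cases: list[ManualTestCase]) -> dict[str, list[str]]:
    """Return case IDs grouped under their suite name."""
    suites = defaultdict(list)
    for case in cases:
        suites[case.suite].append(case.case_id)
    return dict(suites)


login_case = ManualTestCase(
    case_id="TC-001",
    requirement_id="REQ-010",
    title="Login with valid credentials",
    preconditions=["A registered user account exists"],
    steps=["Open the login page", "Enter valid credentials", "Select Sign in"],
    expected_result="The dashboard is displayed within 3 seconds",
    suite="authentication",
)
print(group_by_suite([login_case]))
```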

Execute test cases

During test execution, each case is run according to its defined steps. Testers document outcomes and mark each case as passed or failed. Execution involves:

- Running each test case step by step

- Recording the actual outcome for each action

- Comparing results with the expected outcome

- Logging any deviation, inconsistency, or unexpected behavior

- Capturing supporting evidence such as screenshots or console logs

Execution provides insight into current application behavior across different workflows.

Log defects

Defects found during testing are documented in a defect tracking system. The goal is to provide complete and reproducible information to developers. A typical defect report includes:

- A concise summary of the issue

- Steps required to trigger the defect

- Observed behavior and its impact on the system

- Environment details such as browser version, OS, or build number

- Any supporting files (e.g., logs, screenshots, data samples)

This step supports faster triage and resolution by development teams.
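
A defect report with the elements above can be checked for completeness before it is submitted. The sketch below models the report as a small record and flags empty required fields; the `DefectReport` class and `missing_fields` helper are hypothetical and not tied to any specific tracking system.

```python
from dataclasses import dataclass, field


@dataclass
class DefectReport:
    summary: str
    steps_to_reproduce: list[str]
    observed_behavior: str
    environment: dict[str, str]   # e.g. browser version, OS, build number
    attachments: list[str] = field(default_factory=list)


def missing_fields(report: DefectReport) -> list[str]:
    """Return the names of required fields that were left empty."""
    missing = []
    if not report.summary.strip():
        missing.append("summary")
    if not report.steps_to_reproduce:
        missing.append("steps_to_reproduce")
    if not report.observed_behavior.strip():
        missing.append("observed_behavior")
    if not report.environment:
        missing.append("environment")
    return missing


draft = DefectReport(
    summary="Checkout button unresponsive after applying a coupon",
    steps_to_reproduce=[],
    observed_behavior="Nothing happens when the button is selected",
    environment={"browser": "example browser", "build": "example build"},
)
print("missing:", missing_fields(draft))
```

Attachments are treated as optional here, since not every defect has a log or screenshot to accompany it.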

Retest and regression test

After a defect is addressed, testers return to the failed case to confirm the fix. They also revisit related areas of the application. This phase includes:

- Re-executing previously failed test cases using the same conditions

- Validating all linked features that may have been affected by the change

- Running a predefined set of regression cases

- Watching for new or repeated issues in previously stable areas

- Updating the test management system with the latest results

This step guards against unintended side effects across the application.

Result reporting

Once execution is complete, results are compiled into a format suitable for stakeholders. Reports typically contain:

- Summary of executed cases, including total counts and outcomes

- List of open, resolved, and deferred defects

- Observations that may require follow-up investigation

- Recommendations based on test outcomes and risk areas

- Traceability between test cases and original requirements

Reporting supports product decisions by providing a full picture of test coverage and system quality.
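
The summary counts and the requirement traceability in such a report can be derived directly from execution records. The sketch below assumes each record is a tuple of case ID, requirement ID, and status; that format, the status labels, and the sample IDs are assumptions made for the example.

```python
from collections import Counter

# Each record: (case_id, requirement_id, status). Sample data for illustration only.
results = [
    ("TC-1", "REQ-1", "passed"),
    ("TC-2", "REQ-1", "failed"),
    ("TC-3", "REQ-2", "passed"),
    ("TC-4", "REQ-2", "passed"),
]


def summarize(records: list[tuple[str, str, str]]) -> dict:
    """Return total, passed, and failed counts plus the pass rate."""
    counts = Counter(status for _, _, status in records)
    total = len(records)
    pass_rate = counts["passed"] / total if total else 0.0
    return {
        "total": total,
        "passed": counts["passed"],
        "failed": counts["failed"],
        "pass_rate": round(pass_rate, 2),
    }


def traceability(records: list[tuple[str, str, str]]) -> dict[str, list[str]]:
    """Map each requirement to the test cases that cover it."""
    matrix: dict[str, list[str]] = {}
    for case_id, requirement_id, _ in records:
        matrix.setdefault(requirement_id, []).append(case_id)
    return matrix


print(summarize(results))
print(traceability(results))
```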

GEM Corporation is a global technology partner specializing in tailored software development and end-to-end quality assurance. Since 2014, the company has delivered over 300 projects across the EU, Australia, Japan, Korea, and more – serving businesses that require both domain expertise and engineering precision. GEM’s custom software services focus on building scalable, secure applications that align with specific business objectives. On the quality assurance side, GEM provides a full range of manual and automated testing, covering functional, integration, security, and performance layers. The QA team applies both structured test design and exploratory methods to identify risks early and validate complex systems under real-world conditions. With a broad tech stack and a delivery model built for adaptability, GEM supports clients from initial concept through to stable release.


Conclusion

Manual testing remains a core part of software validation, especially in scenarios where human judgment, context, and adaptability are required. The different types of manual testing, including black box, white box, integration, system, acceptance, regression, and exploratory, help teams assess both technical accuracy and real-world behavior. Each type plays a role in uncovering defects, verifying workflows, and supporting release stability. Combined with a clear process, manual testing supports consistent delivery across changing requirements. While automation covers repeatable tasks, manual testing continues to provide insight into areas where flexibility and attention to detail are necessary. Its value lies in its focus on logic, usability, and user outcomes.

To learn how GEM can support your next project with tailored QA services or custom software solutions, reach out to our team for consultation.