Contents

- What is the AI Governance Framework?

- Core Pillars of an Effective AI Governance Framework

- Levels of AI Governance

- Why AI Compliance Matters for Enterprises

- Global Regulations Shaping Enterprise AI Governance

- AI Governance in Practice Across Industries

- Building a Responsible AI Governance Framework: A Step-by-Step Approach

- 1. Define Ownership and Governance Structure

- 2. Align with Company Values and Risk Appetite

- 3. Map Current AI Use Cases and Risk Exposure

- 4. Draft Ethical and Operational Guidelines

- 5. Develop an AI Compliance Roadmap

- 6. Select Tools for Model Monitoring and Documentation

- 7. Train Teams and Embed Governance into Workflows

- 8. Review Regularly and Evolve with AI Maturity

- Tools & Technologies That Support AI Governance

- GEM Corporation: Your AI Governance and Enablement Partner

- Conclusion

- What exactly is an AI Governance Framework, and why does my company need one?

- What are the most important components or "pillars" of a responsible AI framework?

- Why is it so important for enterprises to focus on AI compliance right now?

- Which global regulations should my enterprise be aware of when setting up governance?

- What is the first practical step we should take to start building this framework?

Enterprises are moving fast with AI, but without structure, things can get messy, fast. That’s where an AI Governance Framework comes in. It sets the direction for how teams manage risk, align with regulations, and keep AI projects in check. In this guide, we’ll walk through the key components of a solid framework, how to build one that fits your organization, and the regulations you need to watch. You’ll also find practical steps and real-world examples to help you move from ideas to action. Let’s start with what an AI governance framework actually is.

What is the AI Governance Framework?

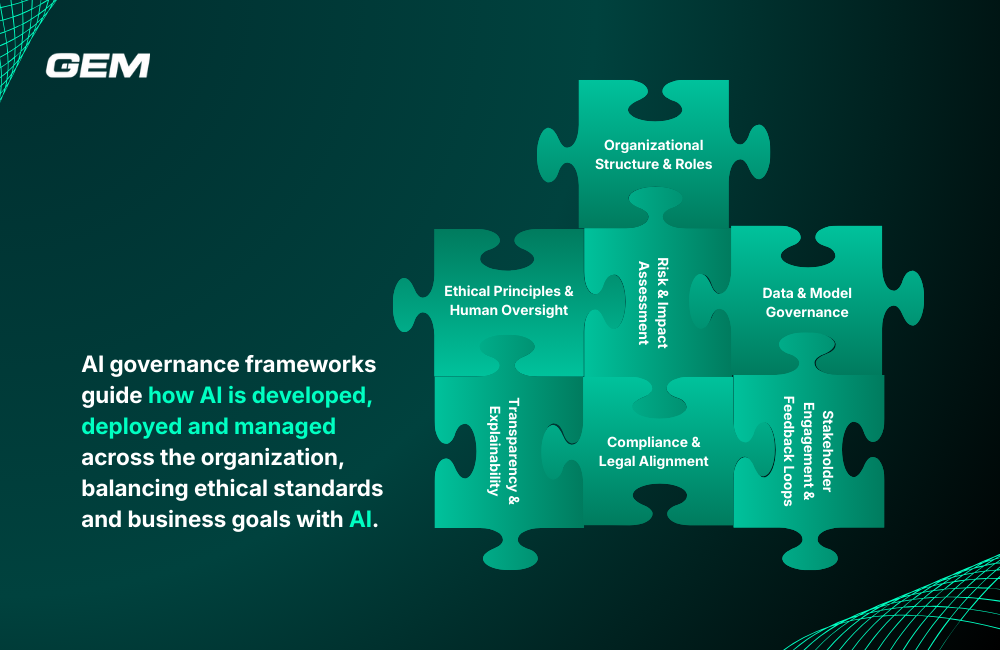

An AI governance framework is a structured approach that guides how AI is developed, deployed, and managed across the organization. It brings together policies, workflows, and accountability measures to make sure AI use aligns with ethical standards, internal goals, and external regulations.

The framework touches on everything from assigning responsibilities to defining how data and models are handled. It also supports transparency, monitors for risks, and helps teams respond to issues early. The most effective frameworks are flexible enough to adapt as AI evolves, while still keeping outcomes predictable and aligned with business priorities.

Core Pillars of an Effective AI Governance Framework

Building a responsible AI program starts with a framework grounded in practical and scalable components. These pillars form the foundation for long-term alignment, trust, and performance.

- Organizational Structure & Roles

Clear ownership is critical. Teams across legal, compliance, data science, IT, and executive leadership need defined responsibilities. A cross-functional setup makes it easier to embed governance directly into AI workflows.

- Ethical Principles & Human Oversight

Governance starts with agreed-upon values. Fairness, transparency, inclusiveness, and accountability guide how decisions are made and where human oversight is required. These principles shape how AI is designed and evaluated.

- Risk & Impact Assessment

This involves identifying and reviewing potential issues early, such as bias, safety, or data misuse. Ongoing risk assessments help teams prioritize what to monitor and where to intervene.

- Data & Model Governance

From training to deployment, every stage of the AI lifecycle needs clear standards. This includes data quality checks, access control, model documentation, and performance monitoring in production.

- Transparency & Explainability

Systems should be designed to support internal review and user understanding. That means making it possible to audit decisions, explain outcomes, and track system behavior, especially in high-stakes use cases.

- Compliance & Legal Alignment

As AI regulations grow more complex, your framework should map AI systems to applicable laws and standards. This includes preparing for audits and keeping documentation ready for review.

- Stakeholder Engagement & Feedback Loops

Governance isn’t just top-down. Input from users, customers, and affected parties can surface risks and improve system design. Build structured channels for feedback and make adjustments based on what you learn.


Levels of AI Governance

AI governance doesn’t look the same across every organization. Most businesses fall somewhere along a maturity curve, depending on their AI use, industry requirements, and internal capabilities. These levels help outline where companies are today and where they might go next.

- Informal: Governance at this stage is based on general company values or leadership expectations. There may be informal ethical discussions or review boards in place, but no standardized processes or documentation.

- Ad hoc: Organizations in this category create policies or controls in reaction to specific events or risks. While individual teams may follow some structure, there’s no unified system guiding how AI is built, reviewed, or deployed across the business.

- Formal: A formal governance model includes defined roles, structured risk assessments, and ongoing oversight. It reflects both organizational values and legal requirements, often supported by cross-functional teams and documented procedures.

Maturity in AI governance tends to grow with AI adoption. As more functions rely on AI, structured oversight becomes harder to postpone and more valuable to embed early.

Why AI Compliance Matters for Enterprises

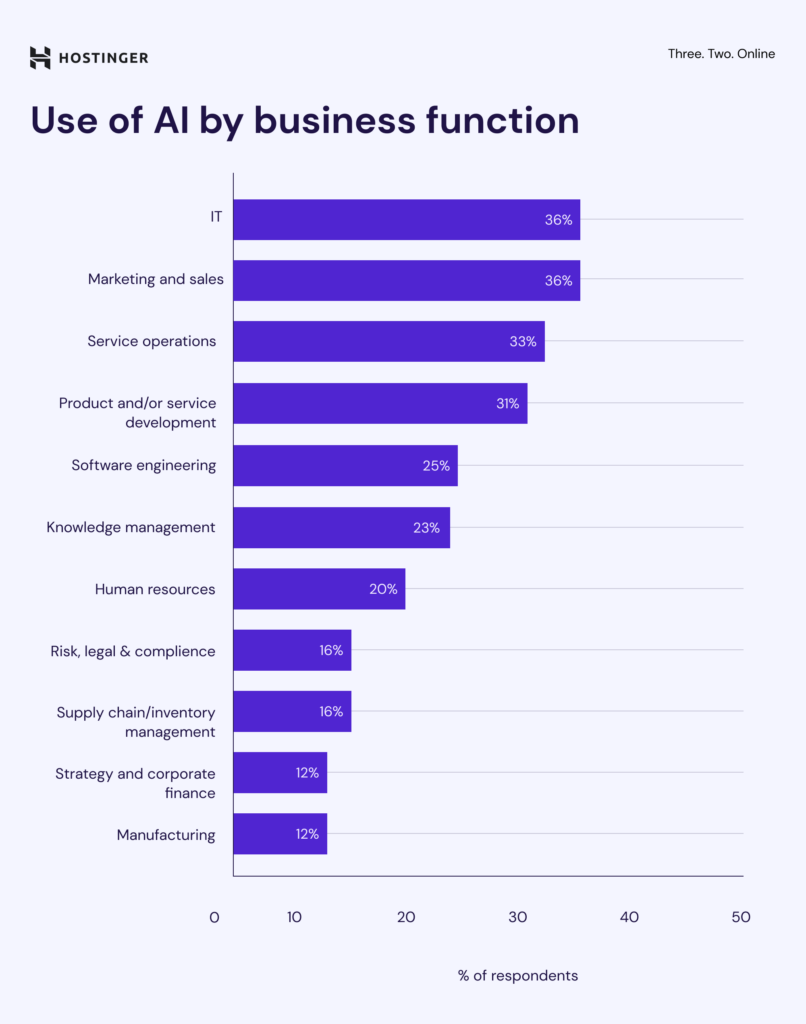

Source: McKinsey

According to McKinsey’s latest survey, 88% of respondents say their organizations now use AI regularly in at least one business function, up from 78% the year before. With AI touching more workflows, the stakes are higher for getting it right.

Responsible AI use means more than avoiding harm: it means building systems that perform well, adapt quickly, and earn trust. As AI adoption grows, so does the need for clear governance and compliance.

Here’s why compliance matters:

- Reduces legal and reputational risk: Clear governance policies help teams flag and address issues early, limiting exposure to lawsuits, regulatory penalties, or public backlash.

- Improves operational clarity and efficiency: Having defined processes reduces uncertainty, speeds up decision-making, and makes it easier to scale AI systems with fewer setbacks.

- Builds trust across stakeholders: When customers, employees, and partners see that AI is managed responsibly, their confidence grows. That trust is key to long-term adoption and brand credibility.

- Supports faster, safer deployment at scale: A strong framework removes guesswork. Teams can launch new models more confidently, knowing they’ve met internal and external requirements.

Global Regulations Shaping Enterprise AI Governance

As AI adoption rises, regulators around the world are putting frameworks in place to guide responsible use. Enterprises operating across regions need to stay aware of these evolving requirements, not just for compliance, but to build trust and reduce operational risk.

European Union

- EU AI Act: The first comprehensive legal framework for AI, structured around a risk-based approach. It bans harmful applications (such as social scoring), sets strict requirements for high-risk systems (like those in healthcare or education), and mandates transparency for generative AI tools.

- GDPR: Sets clear rules for personal data protection, requiring legal grounds for processing and transparency in automated decision-making. It also gives individuals affected by solely automated decisions the right to meaningful information about the logic involved, often described as a “right to explanation.”

North America

- SR 11-7 (U.S.): Issued by the Federal Reserve in 2011, this guidance focuses on model risk management in financial institutions. While not AI-specific, it’s widely adopted for managing machine learning and other algorithmic systems used in decision-making.

- Canada’s Directive on Automated Decision-Making: Applies to federal government use of AI. It classifies systems by risk level and requires an Algorithmic Impact Assessment (AIA) to ensure fairness, accuracy, and explainability in automated decisions.

Asia-Pacific

- National Frameworks: Countries like Singapore, Japan, and South Korea have introduced national AI strategies focused on innovation and safety. Common themes include trustworthiness, transparency, and data protection (e.g., Japan’s APPI).

- Cross-Border Initiatives: Japan’s “Data Free Flow with Trust” (DFFT) promotes responsible cross-border data sharing, aiming to balance innovation with privacy protection. It’s gaining traction across the APAC region.

Global Standards

- OECD Principles: Offers non-binding guidelines that promote human-centric and transparent AI. These principles are often used as a reference by governments and industry groups.

- ISO/IEC Standards: ISO/IEC 42001:2023 introduces a certifiable management system for AI, helping organizations align with best practices in risk and compliance.

- NIST AI RMF: A practical, voluntary framework from the U.S. that outlines four key functions (Govern, Map, Measure, and Manage) to guide AI risk management throughout the system lifecycle.

These frameworks and standards are shaping the way enterprises design, deploy, and monitor AI. Staying informed and adaptable is key to maintaining both compliance and trust.

AI Governance in Practice Across Industries

AI adoption is growing rapidly across sectors, but how companies govern these systems varies by industry. As more AI-driven decisions shape operations, risk, and customer interactions, structured governance plays a key role in keeping outcomes aligned and responsible.

Source: Hostinger

- Technology

With 88% of companies using generative AI, the tech sector leads in implementation. Many have already embedded AI ethics boards, model documentation standards, and internal audits into their workflows. Their early start allows them to test and refine governance structures at scale.

- Professional Services

Around 80% of firms in this sector use generative AI to support knowledge work, from legal reviews to consulting deliverables. Governance often focuses on ensuring client confidentiality, explainability, and model traceability, especially in client-facing tools.

- Advanced Industries & Manufacturing

These industries report 79% adoption and rely on AI for predictive maintenance, demand forecasting, and automation. Governance in this space often includes risk matrices tied to safety standards and supplier compliance tracking.

- Media and Telecom

Also at 79%, these companies use AI in content generation, ad targeting, and customer support. Governance efforts generally prioritize content transparency, bias mitigation, and user data protection.

- Consumer Goods and Retail

With 68% adoption, AI is helping businesses forecast trends, personalize shopping experiences with predictive recommendations, and manage supply chains. Governance here often includes customer data protections, consent tracking, and fairness in algorithmic recommendations.

- Financial Services

65% of firms in this sector use AI, particularly for credit scoring, fraud detection, and portfolio analysis. Many follow model risk management guidelines like SR 11-7, with formalized review processes, audit trails, and explainability tools baked into their compliance workflows.

- Healthcare, Pharma, and Medical Products

63% adoption reflects growing use of AI in diagnostics, drug development, and operational optimization. Governance in this sector often centers around patient safety, regulatory compliance, and clinical validation of AI models.

- Energy and Materials

Though adoption is lower at 59%, use cases like equipment monitoring and emissions forecasting are increasing. Governance here focuses on operational risk, model reliability, and environmental accountability.

Building a Responsible AI Governance Framework: A Step-by-Step Approach

For enterprise leaders, building an AI governance framework is both a leadership challenge and a strategic opportunity. The goal is to create a structure that aligns AI development with business objectives, risk management, and long-term trust.

1. Define Ownership and Governance Structure

Start by establishing who leads AI governance and how decisions get made. This typically includes forming a cross-functional governance committee with representatives from data science, IT, legal, compliance, product, and executive leadership. Assign clear roles for reviewing AI initiatives, handling escalations, and approving models for deployment. A documented RACI matrix is often useful here.
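A RACI matrix can live as plain data and be checked automatically. The sketch below is illustrative only: the activity names, role names, and the `validate_raci` helper are assumptions made for this example, and the rule it enforces (exactly one Accountable and at least one Responsible per activity) is the common RACI convention rather than a requirement of any particular standard.

```python
from collections import Counter

# Hypothetical RACI matrix: activity -> {role: "R" | "A" | "C" | "I"}
RACI = {
    "review_ai_initiative": {
        "data_science": "R", "governance_committee": "A", "legal": "C", "executive": "I",
    },
    "handle_escalation": {
        "compliance": "R", "governance_committee": "A", "legal": "C", "product": "I",
    },
    "approve_model_deployment": {
        "it": "R", "governance_committee": "A", "compliance": "C", "product": "I",
    },
}


def validate_raci(matrix):
    """Return a list of problems; an empty list means the matrix passes."""
    problems = []
    for activity, assignments in matrix.items():
        counts = Counter(assignments.values())
        # Exactly one role should be Accountable for each activity.
        if counts["A"] != 1:
            problems.append(f"{activity}: expected exactly one Accountable, found {counts['A']}")
        # At least one role should be Responsible for doing the work.
        if counts["R"] < 1:
            problems.append(f"{activity}: no Responsible role assigned")
    return problems


issues = validate_raci(RACI)
print("RACI OK" if not issues else "\n".join(issues))
```

Running a check like this whenever the matrix changes helps catch activities that have quietly lost their owner.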

2. Align with Company Values and Risk Appetite

Your governance framework should reflect the organization’s ethical stance and tolerance for risk. For example, if you operate in healthcare or finance, your threshold for explainability and auditability may be higher. Make sure values like fairness, transparency, and accountability are not just words but are mapped to measurable guidelines that align with business strategy.

3. Map Current AI Use Cases and Risk Exposure

Conduct a company-wide inventory of AI and machine learning use cases, including those in development. Evaluate each use case based on impact, scale, automation level, and data sensitivity. A risk matrix helps prioritize where deeper governance controls are needed. This is also the right time to identify shadow AI (use cases developed outside formal channels) and bring them into scope.
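One way to make the risk matrix concrete is to score each use case on the four factors named above and sort the inventory by total. This is a minimal sketch under stated assumptions: the 1 to 3 scale, the equal weighting, the tier thresholds, and the example use cases are all hypothetical and should be replaced by whatever your governance committee agrees on.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int            # each factor scored 1 (low) to 3 (high)
    scale: int
    automation: int
    data_sensitivity: int
    registered: bool = True  # False marks shadow AI found outside formal channels

    def score(self) -> int:
        # Equal weighting of the four factors (an assumption for this sketch).
        return self.impact + self.scale + self.automation + self.data_sensitivity


def tier(use_case: UseCase) -> str:
    """Map a total score (4 to 12) onto illustrative risk tiers."""
    total = use_case.score()
    if total >= 10:
        return "high"
    if total >= 7:
        return "medium"
    return "low"


# Hypothetical inventory entries.
inventory = [
    UseCase("credit_scoring", 3, 3, 3, 3),
    UseCase("marketing_copy_assistant", 1, 2, 1, 1, registered=False),
    UseCase("demand_forecast", 2, 2, 2, 1),
]

# Highest-risk use cases first, with shadow AI called out.
for uc in sorted(inventory, key=UseCase.score, reverse=True):
    note = "" if uc.registered else " (shadow AI: bring into scope)"
    print(f"{uc.name}: score={uc.score()} tier={tier(uc)}{note}")
```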

4. Draft Ethical and Operational Guidelines

Translate abstract principles into actionable policies. This includes guidance for data selection, bias mitigation, model validation, human-in-the-loop requirements, and acceptable use. Consider publishing an internal AI Code of Practice to set a consistent standard across teams. Where possible, align this with external frameworks (e.g., OECD, ISO/IEC 42001) for credibility and future compatibility.

5. Develop an AI Compliance Roadmap

Regulatory requirements vary by geography and industry, and they’re evolving fast. Work with legal and compliance teams to create a roadmap covering key obligations like privacy (GDPR, CCPA), explainability, and human oversight. Include timelines for documentation, audits, and reporting mechanisms. This roadmap should be reviewed quarterly to stay ahead of changes.
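A roadmap like this can start as a simple list of obligations with owners and due dates, queried at each quarterly review. The sketch below assumes a 90-day review interval; the tasks, owners, and dates are placeholders invented for illustration, not actual regulatory deadlines.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Obligation:
    regulation: str
    task: str
    owner: str
    due: date


# Placeholder entries; real tasks and dates come from legal and compliance.
ROADMAP = [
    Obligation("GDPR", "Privacy documentation review", "legal", date(2025, 3, 31)),
    Obligation("CCPA", "Consumer notice review", "compliance", date(2025, 6, 30)),
    Obligation("internal", "Human-oversight sign-off process", "governance_committee", date(2025, 9, 30)),
]


def due_before_next_review(roadmap, today, review_interval_days=90):
    """Return obligations due (or already overdue) before the next review, earliest first."""
    cutoff = today + timedelta(days=review_interval_days)
    return sorted((o for o in roadmap if o.due <= cutoff), key=lambda o: o.due)


today = date(2025, 2, 1)
for item in due_before_next_review(ROADMAP, today):
    status = "OVERDUE" if item.due < today else "due"
    print(f"[{status}] {item.due} {item.regulation}: {item.task} (owner: {item.owner})")
```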

6. Select Tools for Model Monitoring and Documentation

Invest in tools that support version control, performance monitoring, and explainability. Common choices include model cards, datasheets, and bias detection platforms. The goal is to make it easy for teams to document model intent, assumptions, limitations, and performance across environments. Tools should integrate into your existing MLOps stack for minimal disruption.
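To show what documenting intent, assumptions, limitations, and performance can look like in practice, here is a minimal model card sketch. The `ModelCard` class, its field names, and the example values are assumptions for illustration; they do not follow any specific tool's schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    intent: str
    assumptions: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)
    # environment name -> {metric name: value}
    performance: dict[str, dict[str, float]] = field(default_factory=dict)

    def missing_fields(self) -> list[str]:
        """Names of fields left empty, useful as a pre-approval check."""
        return [key for key, value in asdict(self).items() if not value]


# Hypothetical example values.
card = ModelCard(
    name="churn_classifier",
    version="1.2.0",
    intent="Rank existing customers by likelihood of cancelling within 90 days.",
    assumptions=["Training data covers the last 24 months of accounts."],
    limitations=["Not validated for customers with under 30 days of history."],
    performance={"staging": {"auc": 0.81}, "production": {"auc": 0.78}},
)

print(json.dumps(asdict(card), indent=2))
print("Missing fields:", card.missing_fields())
```

Because the card is plain data, it can be stored next to the model artifact and versioned with it.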

7. Train Teams and Embed Governance into Workflows

Governance is only effective if it’s adopted. Roll out training programs tailored to different roles – data scientists, product managers, legal, and executive sponsors. Focus on how governance fits into existing workflows, not just what the rules are. Encourage teams to raise risks early and document how decisions are made. This helps shift culture from reactive to proactive.

8. Review Regularly and Evolve with AI Maturity

AI governance isn’t a one-off project. Set a cadence for reviewing the framework, typically every 6 to 12 months. Track key metrics like model approval times, audit findings, and incident reports to evaluate how well the framework is working. As your AI maturity grows, refine your policies, expand tooling, and revisit risk thresholds.
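The metrics mentioned above are simple to compute once the underlying records exist. The following sketch assumes three hypothetical record sets (approval date pairs, audit findings, and incident dates); the data shown is made up for illustration.

```python
from datetime import date
from statistics import median

# Hypothetical records: (submitted, approved) date pairs for model approvals.
approvals = [
    (date(2025, 1, 6), date(2025, 1, 20)),
    (date(2025, 2, 3), date(2025, 2, 10)),
    (date(2025, 3, 3), date(2025, 4, 2)),
]
audit_findings = [{"id": "F-1", "open": True}, {"id": "F-2", "open": False}]
incidents = [date(2025, 1, 15), date(2025, 3, 9)]


def median_approval_days(pairs):
    """Median number of days between submission and approval."""
    return median((approved - submitted).days for submitted, approved in pairs)


def governance_summary(approvals, audit_findings, incidents):
    """Collect the review metrics into one dictionary."""
    return {
        "median_approval_days": median_approval_days(approvals),
        "open_audit_findings": sum(1 for f in audit_findings if f["open"]),
        "incident_count": len(incidents),
    }


summary = governance_summary(approvals, audit_findings, incidents)
print(summary)
```

Comparing these numbers from one review cycle to the next gives a rough signal of whether the framework is speeding teams up or slowing them down.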

Tools & Technologies That Support AI Governance

Governance frameworks need the right tools to stay consistent and scalable. These technologies streamline oversight, increase visibility, and reduce manual overhead:

Model Documentation Tools

Tools like model cards and datasheets help standardize how models are described and evaluated. They improve cross-team communication and support audit readiness.

Bias Detection and Explainability Platforms

These tools test for bias, analyze fairness, and make model outputs easier to understand for both technical and non-technical stakeholders.
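As one example of what a bias check measures, the sketch below computes the demographic parity difference: the gap between the highest and lowest positive-prediction rates across groups. It is only one of several fairness metrics, and the group labels and predictions here are invented for illustration; dedicated platforms typically offer many more measures.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive (1) predictions per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical data: group membership and binary model decisions.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

print(selection_rates(groups, predictions))
print(demographic_parity_difference(groups, predictions))
```

What counts as an acceptable gap is a policy decision for the governance committee, not something the metric decides on its own.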

Workflow Orchestration and Audit Trail Tools

Automating governance tasks ensures consistency. Platforms that track who accessed what, when, and why support accountability and reduce compliance gaps.
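A basic audit trail records who did what, when, and why, and makes later tampering detectable. The sketch below uses a hash chain for that purpose, which is one possible design and not a description of any specific product; the `AuditTrail` class and the example entries are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log where each entry stores the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, who, what, why):
        entry = {
            "who": who,
            "what": what,
            "why": why,
            "when": datetime.now(timezone.utc).isoformat(),
            "prev": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered or reordered."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record("alice", "read:training_data_v3", "bias review")
trail.record("bob", "deploy:churn_classifier_1.2.0", "approved release")
print(trail.verify())
```

Editing any recorded field after the fact changes that entry's digest, so `verify()` returns False for a modified log.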

Governance Dashboards and Policy Management Software

Real-time dashboards track policy adherence, flag anomalies, and provide a central hub for managing governance policies across teams and projects.

GEM Corporation: Your AI Governance and Enablement Partner

Partnering with the right team can simplify the complexity of AI governance, especially when internal resources are stretched or regulations are unclear. GEM Corporation brings strong technical expertise and real-world experience to help enterprises build, scale, and govern AI responsibly.

Since 2014, GEM has supported Fortune 500 companies and fast-growing startups across Japan, Asia-Pacific, and Europe. With 400+ IT professionals, we focus on delivering secure, high-impact solutions with speed, quality, and accountability.

Our AI services cover the full lifecycle, from strategy to deployment, backed by certifications like ISO/IEC 27001:2022, ISO 9001:2015, and CMMI Level 3. As an ISTQB Gold Partner and official consulting partner of Databricks and ServiceNow, GEM integrates quality governance into every engagement.

Conclusion

An AI governance framework shapes how AI is built, evaluated, and managed across the enterprise. It connects business values with oversight, supports regulatory alignment, and strengthens how teams work with data and models. From defining ownership to selecting the right tools, the steps covered in this guide give leaders a practical path forward. As AI adoption grows, staying structured and consistent becomes more important. To move from planning to execution with the right support, contact GEM to explore how we can support your AI governance journey.

What are the most important components or "pillars" of a responsible AI framework?

The framework is built on several core pillars, including Organizational Structure & Roles (clear ownership), Ethical Principles & Human Oversight, Risk & Impact Assessment, Data & Model Governance, Transparency & Explainability, Compliance & Legal Alignment, and Stakeholder Engagement & Feedback Loops.

Why is it so important for enterprises to focus on AI compliance right now?

Compliance is vital because it reduces legal and reputational risk from issues like bias or data misuse. It also improves operational efficiency by providing clear processes, builds trust with customers, and allows for faster, safer deployment at scale because internal and external requirements have already been met.

Which global regulations should my enterprise be aware of when setting up governance?

You should pay attention to risk-based frameworks like the EU AI Act, data protection laws like GDPR (which also supports what is often called a right to explanation for AI outcomes), and industry-specific guidance such as the U.S. financial sector's SR 11-7 on model risk management. Global standards like the NIST AI RMF also offer practical guidance.

What is the first practical step we should take to start building this framework?

The very first step is to Define Ownership and Governance Structure. This means establishing who leads AI governance (e.g., forming a cross-functional committee with members from legal, IT, and data science) and assigning clear roles for reviewing and approving new AI initiatives and models.