Contents

- What is Cloud Computing?

- What is Edge AI?

- Edge AI vs Cloud AI: Distinct Functions, Shared Objectives

- Cloud Computing Role in Edge AI Workflows

- How Cloud–Edge AI Is Applied Across Industries

- Considerations for Cloud and Edge AI Integration

- Approaching Cloud–Edge AI Synergy with a Structured Strategy

- Conclusion

As AI systems are increasingly deployed in environments demanding real-time responsiveness, from autonomous vehicles to industrial sensors, the infrastructure behind them is evolving. According to Gartner, by 2025, nearly 75% of enterprise-generated data will be created and processed outside centralized data centers. This shift is accelerating investment in edge AI, where inference occurs closer to the data source.

At the same time, the cloud remains a core environment for model design, training, and orchestration. Understanding the cloud computing role in edge AI is key to building scalable, responsive systems that meet operational requirements. This article examines how cloud and edge environments interact, where each adds value, and what organizations must consider when aligning the two for intelligent execution.

What is Cloud Computing?

Cloud computing, in the context of AI development, refers to the use of remote infrastructure to support the design, training, and deployment of models at scale. It offers centralized processing power, allowing teams to work with large datasets and computationally intensive algorithms without relying on local hardware. This centralized architecture supports consistency, version control, and collaborative development across distributed teams.

Cloud platforms also provide flexible storage capacity, which is necessary for managing structured and unstructured data used in model training. With global access, development teams can deploy AI services across regions and adapt to variable demand without physical infrastructure constraints.

These characteristics make cloud computing a foundational layer for AI workflows, especially during the pre-deployment and orchestration phases.

What is Edge AI?

Edge AI refers to the execution of machine learning models directly on devices located near the data source, such as industrial sensors, surveillance cameras, mobile devices, or IoT gateways. These models run locally, allowing AI systems to perform inference without sending data to a centralized cloud environment. This setup supports faster response times, lowers reliance on network availability, and offers more control over sensitive information.

Edge AI is particularly relevant in scenarios where latency, bandwidth, or data sovereignty are operational constraints. In manufacturing, for example, edge-based vision systems can detect defects on production lines in real time. In autonomous vehicles, decision-making must occur within milliseconds, something that cloud-based processing cannot reliably support. As hardware becomes more capable, edge AI is expanding its footprint across industries that require immediate insights and localized action.

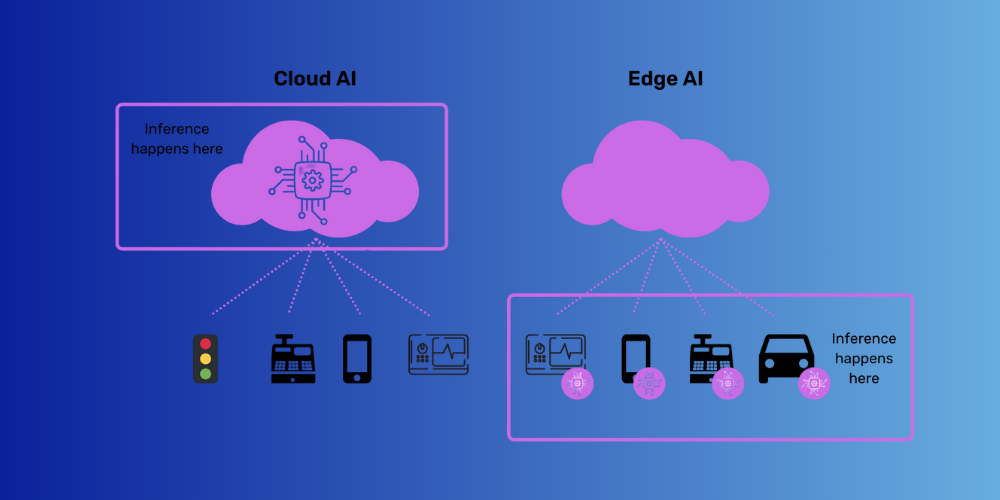

Edge AI vs Cloud AI: Distinct Functions, Shared Objectives

Cloud AI and edge AI operate at different stages of the AI lifecycle and address different technical and operational needs.

Cloud environments are well-suited for data aggregation, model training, and coordinated system management. They provide the computing infrastructure needed to process large datasets, experiment with model architectures, and monitor deployed systems at scale. These capabilities support strategic planning and ongoing model refinement.

Edge environments, in contrast, focus on execution, specifically, running inference on pre-trained models in real time. They are designed to deliver low-latency performance and operate independently of constant cloud connectivity. This makes them effective in disconnected or high-throughput settings where immediate action is required.

Rather than functioning in isolation, cloud and edge AI systems are increasingly designed to operate in tandem. The cloud supports development, model distribution, and lifecycle governance, while the edge handles real-time detection, classification, and decision-making. This division of roles allows organizations to balance central control with local autonomy, an architecture that supports both agility and oversight as AI adoption scales.

Cloud Computing Role in Edge AI Workflows

The cloud computing role in edge AI has expanded from being a support function to serving as the core infrastructure for model development, deployment coordination, and continuous system improvement across distributed environments.

Cloud as the Training Ground

Cloud infrastructure plays a central role in the early phases of AI development. It provides the computing scale and data storage that AI training demands, especially for models built on high-volume, multi-source datasets. These environments allow teams to iterate rapidly and collaborate across locations while maintaining rigorous version control.

Key capabilities of cloud platforms in this stage include:

- High compute availability for training machine learning models

- Access to consolidated datasets for broader model generalization

- Centralized environments for collaboration and model lifecycle management

- Scalable storage for labeled data, historical logs, and retraining archives

These features allow organizations to move from experimentation to production with greater consistency and control.

Edge as the Decision Maker

Following cloud training, models are distributed to edge environments for execution. Because inference takes place directly on devices near the data source, AI systems can respond within milliseconds and function without constant cloud access.

Edge AI is particularly effective in the following scenarios:

- Real-time inference is needed to support autonomous or time-sensitive functions

- Network bandwidth is constrained or unreliable

- Data cannot be sent to the cloud due to regulatory, privacy, or operational limits

- Processing must continue offline or in remote locations

This localized execution structure supports faster decision-making while minimizing reliance on centralized infrastructure.
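As a minimal sketch of this pattern, the example below runs inference on the device and holds results in a bounded buffer until an uplink is available. The `EdgeNode` class, the `threshold_model` stand-in, and the `upload` callback are hypothetical names introduced for illustration; a real deployment would load a trained model and call an actual cloud endpoint.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class EdgeNode:
    """Runs inference locally and queues results until an uplink is available."""

    def __init__(self, model: Callable[[float], str], max_buffer: int = 1000):
        self.model = model
        # Bounded buffer: when full, the oldest result is dropped first.
        self.buffer: Deque[Dict] = deque(maxlen=max_buffer)

    def infer(self, reading: float) -> str:
        # The decision is made on the device, with no network call.
        label = self.model(reading)
        self.buffer.append({"reading": reading, "label": label})
        return label

    def sync(self, upload: Callable[[List[Dict]], bool]) -> int:
        """Try to upload buffered results; keep them if the upload fails."""
        if not self.buffer:
            return 0
        batch = list(self.buffer)
        if upload(batch):
            self.buffer.clear()
            return len(batch)
        return 0


def threshold_model(reading: float) -> str:
    # Stand-in for a trained model: flags readings above a fixed limit.
    return "anomaly" if reading > 75.0 else "normal"
```

Calling `infer` never depends on connectivity, while `sync` can be retried whenever the network returns. The bounded buffer is one possible design choice: it trades completeness of the uploaded history for predictable memory use on constrained hardware.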

Collaboration for Enhanced Performance

The interaction between cloud and edge environments creates a distributed architecture that supports both scale and adaptability. While each environment serves a distinct function, their integration strengthens overall system performance.

Benefits of the cloud-edge collaboration include:

- Scalable rollout of models with centralized control

- Continuous learning loops, where edge-generated data informs model updates in the cloud

- Improved data handling by filtering or anonymizing inputs locally before cloud transmission

In practice, many key AI lifecycle activities remain cloud-centric:

- Data labeling and annotation workflows

- Model retraining based on edge-derived feedback

- Performance monitoring and system diagnostics

- Governance and compliance reporting

Using this model, organizations can develop centrally, deploy locally, and improve continuously within a single, coordinated framework.
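The local filtering step in this loop might look like the sketch below. It assumes each record carries a `confidence` score and a `device_id`, and that only low-confidence predictions are worth sending back for retraining; the field names and the 0.8 threshold are illustrative assumptions, not part of any specific platform. Note that salted hashing is pseudonymization rather than full anonymization.

```python
import hashlib
from typing import Dict, List

# Hypothetical field names that must never leave the device.
SENSITIVE_FIELDS = {"name", "location"}


def pseudonymize(device_id: str, salt: str) -> str:
    # Salted SHA-256, truncated to 16 hex characters for readability.
    return hashlib.sha256((salt + device_id).encode("utf-8")).hexdigest()[:16]


def prepare_for_upload(
    records: List[Dict], salt: str, max_confidence: float = 0.8
) -> List[Dict]:
    """Keep only uncertain predictions and strip sensitive fields."""
    out = []
    for rec in records:
        if rec["confidence"] >= max_confidence:
            continue  # confident predictions stay on the device
        cleaned = {k: v for k, v in rec.items() if k not in SENSITIVE_FIELDS}
        cleaned["device_id"] = pseudonymize(rec["device_id"], salt)
        out.append(cleaned)
    return out
```

Filtering before transmission reduces bandwidth use, and the records that do reach the cloud are the ones where the model was least certain, which is often where retraining data is most useful.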

How Cloud–Edge AI Is Applied Across Industries

As investment in AI infrastructure moves from experimentation to deployment, organizations are aligning cloud and edge capabilities to meet sector-specific demands. The cloud computing role in edge AI is reflected in how industries manage real-time decision-making while maintaining centralized control and model optimization.

Smart Manufacturing

Manufacturers deploy edge AI on production lines to monitor equipment health, detect defects, and maintain operational continuity without human intervention. The cloud is used for training complex models using historical data from multiple plants, then distributing those models to edge devices for real-time inference.

Case: A precision engineering firm uses edge AI to detect microcracks in metal components during machining. The system flags anomalies instantly, reducing rework and scrap rates. Data from multiple production sites is aggregated in the cloud to identify systemic issues and update inspection models, allowing the firm to standardize quality control while adapting to localized manufacturing conditions.

Retail

Retailers use edge AI at physical stores to analyze shopper interactions, transaction speeds, and shelf engagement. These insights are processed locally to manage staffing, optimize layout, and trigger restocking. Cloud platforms consolidate this data across locations to support broader forecasting and campaign planning.

Case: A supermarket chain installs edge AI cameras at checkout lanes to measure queue length and customer flow. When wait times increase, alerts are sent to open new counters. At the same time, cloud-based analytics use this data to understand peak hours across regions, adjust workforce scheduling, and inform future store design decisions, improving both customer experience and operational efficiency.

Healthcare

Healthcare providers implement edge AI in diagnostic equipment to support faster, localized analysis, especially in remote or under-resourced facilities. Cloud systems handle model training using anonymized patient data, enabling consistent diagnostic support across networks.

Case: A clinic network uses edge AI within portable X-ray machines to identify signs of pneumonia. Technicians receive initial assessments within seconds, even without internet access. Once connected, anonymized diagnostic data is sent to the cloud, where it contributes to retraining models with broader datasets. This process improves diagnostic accuracy over time while maintaining patient privacy and enabling faster care delivery.

Smart Cities

City systems rely on edge AI to gather real-time data from traffic lights, air quality sensors, and surveillance infrastructure. The cloud serves as a central coordination point for long-term planning, policy simulations, and infrastructure optimization.

Case: A city transportation department deploys edge AI cameras at major intersections to monitor congestion and adjust signal timing dynamically. These systems respond instantly to changing traffic conditions. The cloud collects data from across the city to model traffic patterns and plan new routes or public transit schedules, improving flow and reducing emissions over time.

Energy

Utilities use edge AI to monitor distributed assets such as solar panels, wind turbines, and substations. Local devices make operational decisions in real time, while cloud systems oversee asset performance and coordinate energy distribution across the grid.

Case: An energy provider installs edge AI on solar inverters to detect anomalies and optimize energy output on-site. These devices operate autonomously during outages or low connectivity. Performance data is later synced to a cloud platform, where predictive models update maintenance schedules and forecast generation capacity. This results in higher uptime, reduced field service costs, and more accurate supply planning.

These examples show how combining cloud and edge AI lets companies keep strategic control at scale while acting effectively in the field. The design supports automation as well as continuous learning and adaptability, enabling businesses to address both immediate issues and long-term changes.

Considerations for Cloud and Edge AI Integration

Aligning cloud and edge systems into a cohesive AI infrastructure is often more complex than expected. It requires deliberate planning across data, security, cost, and operational structure. As organizations scale deployments, the following considerations shape both performance and long-term sustainability.

- Data governance and latency trade-offs

AI systems operating across cloud and edge need clear rules for how data is collected, processed, and stored. Some data can be processed locally for speed or compliance reasons, while other datasets may need to be centralized for model retraining or regulatory audits. Decisions around where inference and aggregation happen directly affect latency, bandwidth usage, and legal exposure.
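One way to make such rules explicit is a small routing table that maps each class of data to where it may go. The data classes and destinations below are hypothetical examples; an actual policy would be derived from the organization's legal and compliance requirements.

```python
from enum import Enum


class Destination(Enum):
    EDGE_ONLY = "edge_only"                # processed and kept on the device
    CLOUD_RAW = "cloud_raw"                # uploaded as-is
    CLOUD_ANONYMIZED = "cloud_anonymized"  # uploaded after identifiers are removed


# Hypothetical data classes and rules, for illustration only.
ROUTING_POLICY = {
    "sensor_telemetry": Destination.CLOUD_RAW,
    "patient_image": Destination.CLOUD_ANONYMIZED,
    "face_video": Destination.EDGE_ONLY,
}


def route(data_class: str) -> Destination:
    # Unknown data classes default to staying on the device.
    return ROUTING_POLICY.get(data_class, Destination.EDGE_ONLY)
```

Defaulting unknown data classes to `EDGE_ONLY` is a conservative choice: new data types stay local until someone decides they may be centralized.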

- Interoperability between cloud platforms and edge hardware

Mismatches between cloud services and edge components can create friction. Organizations must assess whether existing edge devices can support models developed in specific cloud environments, particularly when using proprietary tools or APIs. A lack of interoperability may slow deployment cycles or limit flexibility in choosing hardware and software partners.

- Model lifecycle management: versioning, rollback, and update frequency

AI models evolve over time, and managing that evolution across distributed environments requires structure. Businesses need systems that track model versions, automate rollback procedures when performance drops, and support scheduled or conditional updates to edge devices. Without this, inconsistencies across deployments can degrade performance or increase risk.
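A minimal sketch of version tracking with automated rollback is shown below. The `ModelRegistry` class and its accuracy threshold are assumptions made for illustration; production systems typically rely on a dedicated model registry and richer health metrics.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelRegistry:
    min_accuracy: float = 0.90
    # Deployed versions in order, newest last.
    history: List[str] = field(default_factory=list)

    @property
    def active(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def deploy(self, version: str) -> None:
        self.history.append(version)

    def report_accuracy(self, accuracy: float) -> Optional[str]:
        """Roll back one version if accuracy falls below the threshold."""
        if accuracy < self.min_accuracy and len(self.history) > 1:
            self.history.pop()  # discard the failing version
        return self.active
```

If `v2` is deployed after `v1` and a device reports accuracy below the threshold, the registry returns to `v1`. When no earlier version exists, the active version is kept, since there is nothing to roll back to.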

- Security strategy across distributed environments

Expanding AI to the edge increases the number of entry points for potential threats. A distributed security approach must account for device authentication, data integrity, and secure communication channels. Cloud-based oversight can support threat monitoring, but protection mechanisms must also be enforced locally where response times are shorter.
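To illustrate the data-integrity point, the sketch below uses an HMAC from Python's standard library so an edge device can check that a model artifact was not altered in transit. It assumes a secret key has already been provisioned to both sides, which is a simplification; many real systems use asymmetric signatures so that devices never hold a signing key.

```python
import hashlib
import hmac


def sign_artifact(artifact: bytes, key: bytes) -> str:
    """Cloud side: compute an HMAC-SHA256 tag over the model bytes."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(artifact: bytes, signature: str, key: bytes) -> bool:
    """Edge side: recompute the tag and compare in constant time."""
    expected = sign_artifact(artifact, key)
    return hmac.compare_digest(expected, signature)
```

A device would call `verify_artifact` before loading a downloaded model and refuse the update if it returns `False`.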

- Cost management between centralized and decentralized compute resources

Running inference at the edge may reduce cloud compute costs, but it introduces new expenses in device provisioning, local storage, and maintenance. Cloud training costs can also vary based on model complexity and retraining frequency. A balanced cost strategy should account for compute distribution, data transfer volumes, and infrastructure redundancy.
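The trade-off can be made concrete with a rough comparison like the one below. The cost formulas are a deliberately simplified assumption (per-inference pricing plus data transfer for the cloud, amortized hardware plus maintenance for the edge), and every figure passed in is hypothetical rather than taken from any provider's price list.

```python
def monthly_cloud_cost(
    inferences: int, price_per_1k: float, gb_uploaded: float, price_per_gb: float
) -> float:
    """Cloud inference cost: per-request compute plus data transfer."""
    return inferences / 1000 * price_per_1k + gb_uploaded * price_per_gb


def monthly_edge_cost(
    devices: int,
    hardware_cost: float,
    lifetime_months: int,
    maintenance_per_device: float,
) -> float:
    """Edge inference cost: amortized hardware plus monthly maintenance."""
    return devices * (hardware_cost / lifetime_months + maintenance_per_device)
```

With illustrative inputs of 2,000,000 inferences at 0.50 per thousand and 100 GB uploaded at 0.25 per GB, the cloud figure comes to 1,025 per month. Twenty devices costing 600 each, amortized over 24 months with 5 per month in maintenance, come to 600 per month. Real comparisons would also need to include training, redundancy, and staffing costs.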

- Vendor and architecture lock-in risks

Relying heavily on a single cloud provider or proprietary edge infrastructure can limit future adaptability. Businesses that commit early to one ecosystem may face constraints when integrating new technologies or expanding into new markets. Designing with portability in mind, through open standards, modular architectures, or multi-cloud strategies, can preserve strategic options.

Integrating cloud and edge AI systems is a continuous process shaped by trade-offs, policy decisions, and evolving business needs. Organizations that treat these considerations as part of their AI operating model, rather than as one-time implementation tasks, will be better positioned to scale with confidence.

Approaching Cloud–Edge AI Synergy with a Structured Strategy

Achieving a functional relationship between cloud and edge environments is not simply a matter of connecting two systems; it requires a unified architecture that accounts for infrastructure, model lifecycle, and operational logic. Businesses looking to scale AI across distributed environments can benefit from a deliberate approach that addresses technical integration and organizational readiness. The following principles form a practical foundation.

- Design around the use case, not the infrastructure

Many deployments begin by selecting tools or platforms before clarifying the business outcome. A more effective approach starts with defining the operational need, whether it’s faster decision-making at the edge, centralized model governance, or continuous feedback loops. Technical design can then follow, with decisions on where to place inference, store training data, and manage version control driven by the use case. This reduces unnecessary complexity and aligns performance with measurable goals.

- Plan for system-wide governance and iteration

Cloud–edge AI is not a static deployment. It involves constant updates, feedback integration, and performance monitoring across both environments. Early planning should include how models will be retrained, how updates will propagate to edge locations, and how inconsistencies will be resolved. Establishing these processes upfront avoids fragmented operations and allows teams to make adjustments without disrupting service continuity.
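One common way to propagate updates without disrupting service is a staged rollout, where a new model version reaches a small share of devices first. The sketch below assigns each device to a stable bucket by hashing its ID together with the version; the function name and the percentage-based scheme are illustrative assumptions, not a prescribed method.

```python
import hashlib


def in_rollout(device_id: str, version: str, percent: int) -> bool:
    """Return True if this device should receive `version` at this stage."""
    digest = hashlib.sha256(f"{version}:{device_id}".encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a bucket from 0 to 99.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent
```

Because the bucket is derived from a hash, the same device always gets the same answer for a given version, and raising `percent` from 10 to 50 only adds devices without removing any that already received the update.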

- Engage with a partner that understands both dimensions

Bridging cloud and edge requires experience in both environments, along with the ability to build scalable systems that serve multiple stakeholders. GEM Corporation brings proven experience in AI model development, cloud infrastructure, and edge deployment. We support clients not only in system design but also in assessing data readiness, evaluating integration points, and defining governance that aligns with business goals. This comprehensive view allows us to structure solutions that perform reliably across distributed contexts.

GEM is a global IT services and consulting company dedicated to helping organizations build future-ready systems through advanced engineering and strategic advisory services. With over 10 years of experience, GEM has delivered cloud-based and AI-driven solutions across various industries, including retail, healthcare, manufacturing, and energy. Our teams specialize in designing and deploying systems that integrate cloud-native development, multi-cloud management, and managed cloud services. We offer a full spectrum of AI capabilities, ranging from generative models and predictive analytics to computer vision and intelligent automation.

Conclusion

The cloud computing role in edge AI lies in coordinating data, managing models, and sustaining learning across environments. Successful integration depends on thoughtful planning, operational orchestration, and alignment with business goals from the outset. Without this structure, edge deployments may face inconsistency, limited adaptability, or stalled progress.

A capable partner can help navigate both the technical and strategic layers, bringing experience in cloud systems and AI deployment across distributed settings. GEM Corporation supports this journey with a balanced approach that connects immediate execution with long-term architectural strength. To explore how a tailored cloud–edge AI architecture can support your business goals, contact GEM’s team of experts for a focused consultation.